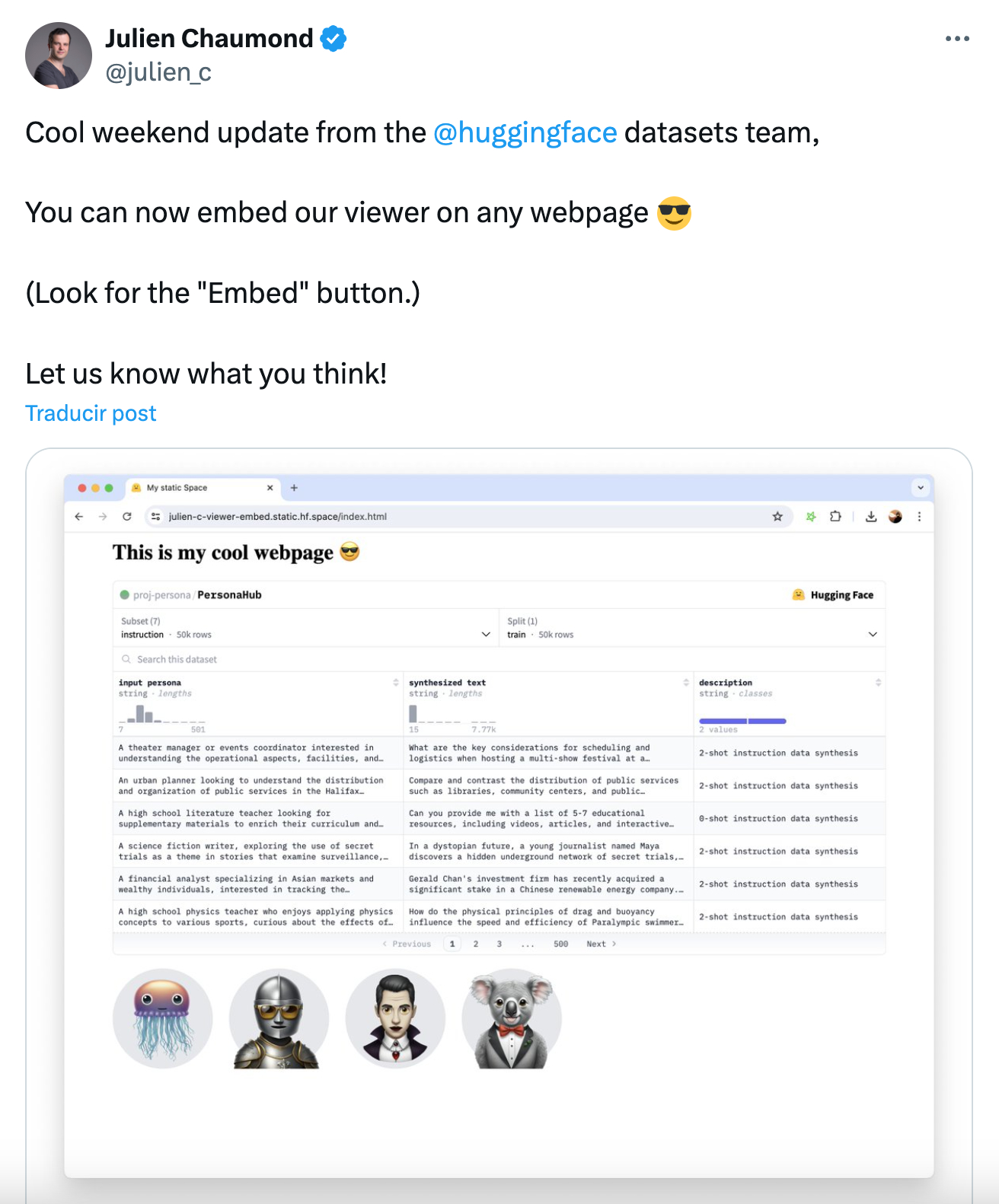

Yesterday, Alex pointed me to this tweet from Julien Chaumond, CTO of Huggingface:

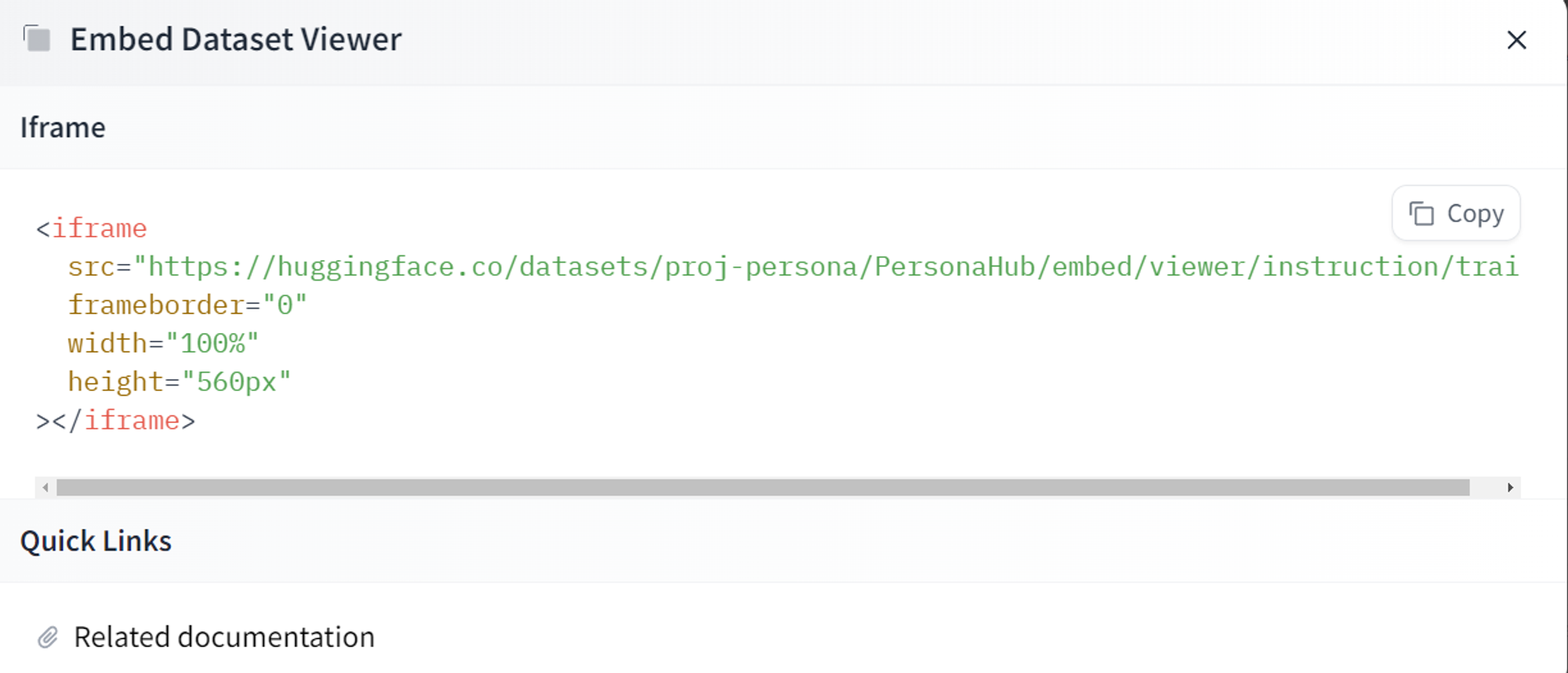

We instantly thought it would be a good idea to embed the visualization in the ZenML dashboard. As the 🤗 Huggingface team already exposed this embedding functionality as a simple iframe, we could easily do this:
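As a rough illustration of how little is involved, the Python sketch below builds such an iframe for a given dataset. The `/embed/viewer` URL pattern is an assumption about how the viewer is exposed, and `build_viewer_iframe` is a hypothetical helper name used only for this example.

```python
from html import escape


def build_viewer_iframe(dataset_name: str, height: str = "560px") -> str:
    """Return iframe markup that points at a dataset's embeddable viewer."""
    # Assumed URL pattern for the embeddable dataset viewer.
    src = f"https://huggingface.co/datasets/{dataset_name}/embed/viewer"
    # Escape attribute values so unexpected characters cannot break the markup.
    return (
        f'<iframe src="{escape(src, quote=True)}" frameborder="0" '
        f'width="100%" height="{escape(height, quote=True)}"></iframe>'
    )


print(build_viewer_iframe("glue"))
```

Any page that renders HTML can then display the returned string as-is.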

See an example on any 🤗 Huggingface dataset

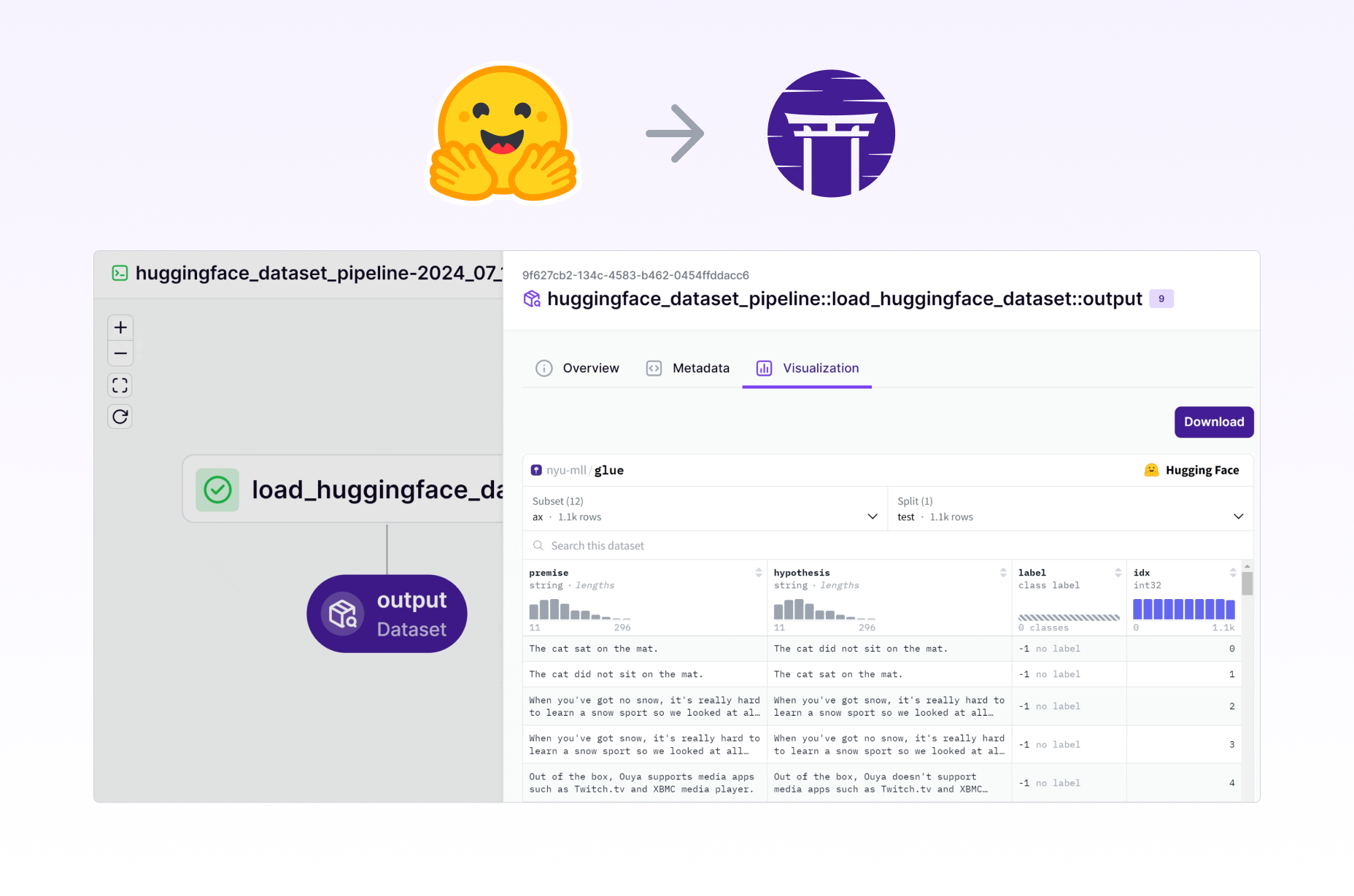

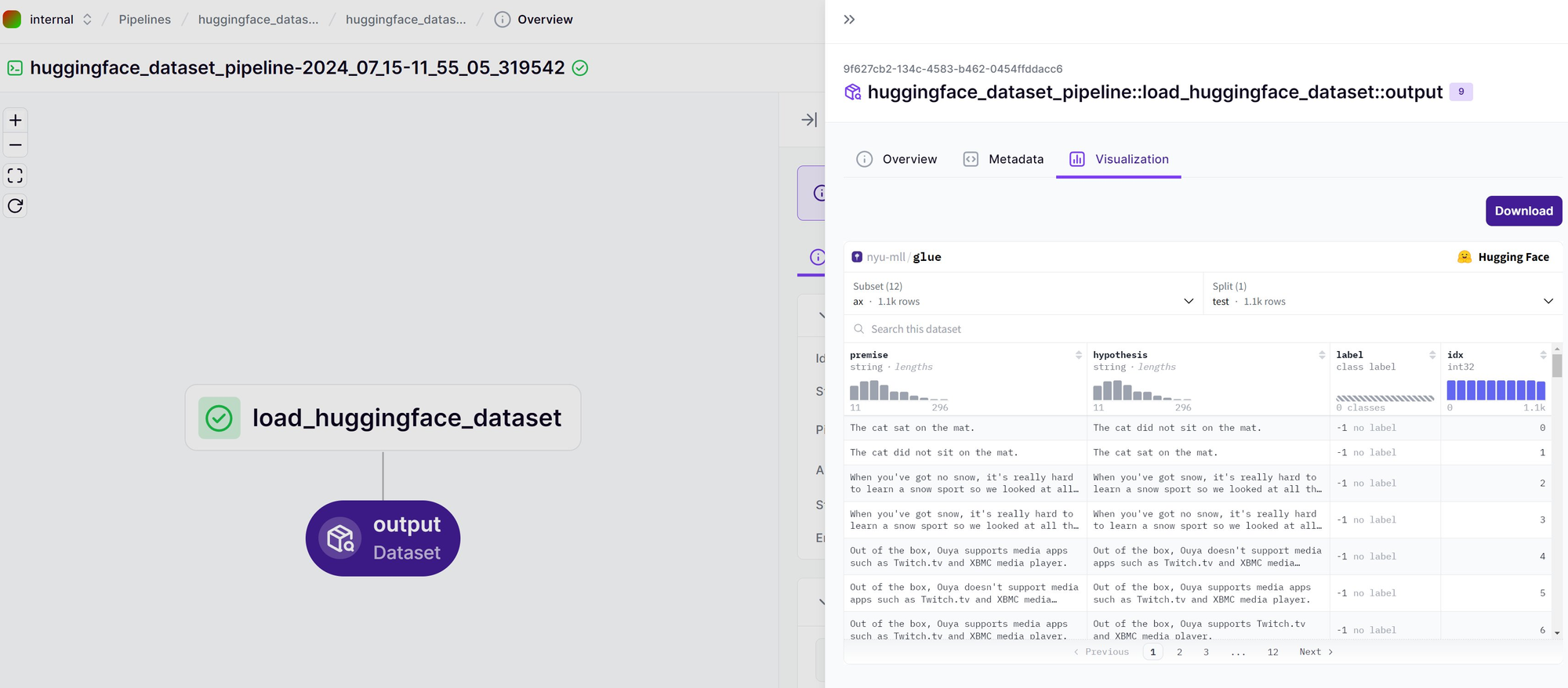

Within a few hours, we had it reviewed and merged:

🏃 Custom visualizations in ZenML

In ZenML, there is a concept known as a materializer, which takes care of writing objects to artifact storage and reading them back. The interface is quite simple, and optionally includes a function where users can attach custom visualizations:
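To give a feel for the shape of that interface, here is a simplified stand-in written with only the standard library. It is not ZenML's actual base class: `SketchMaterializer` is a hypothetical name, and plain strings stand in for ZenML's visualization types.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, Type


class SketchMaterializer(ABC):
    """Simplified stand-in for a materializer; not ZenML's real base class."""

    def __init__(self, uri: str) -> None:
        # Location in artifact storage that this materializer reads and writes.
        self.uri = uri

    @abstractmethod
    def load(self, data_type: Type[Any]) -> Any:
        """Read the object back from `self.uri`."""

    @abstractmethod
    def save(self, data: Any) -> None:
        """Persist the object to `self.uri`."""

    def save_visualizations(self, data: Any) -> Dict[str, str]:
        """Optional hook: map visualization file paths to their file types."""
        # By default, no visualizations are attached.
        return {}
```

A concrete materializer only has to implement `load` and `save`; overriding `save_visualizations` is opt-in.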

The materializer interface is extensible, and it’s easy to make custom ones by adding a class to your codebase. For 🤗 Huggingface datasets, there is already a standard materializer that takes care of reading and writing a dataset to and from storage. All that needed to be done was to implement the save_visualizations function.

📢 Note: there are other ways to create custom visualizations in ZenML, but this was the simplest in this case.

The save_visualizations function expects us to return a dictionary in which each key is the path where a visualization file is stored, and each value is the type of that file. ZenML already supports HTML file types, so the logic was fairly simple. Here is the implementation:
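To make the shape of that return value concrete, the following is a minimal standalone sketch rather than the actual ZenML code. It writes an HTML file containing the viewer iframe and returns a path-to-type mapping. The function name `save_viewer_visualization`, the file name `viewer.html`, the `"html"` string standing in for ZenML's HTML visualization type, and the `/embed/viewer` URL pattern are all assumptions made for illustration.

```python
import os
import tempfile
from typing import Dict

HTML = "html"  # stand-in for ZenML's HTML visualization type (assumption)


def save_viewer_visualization(uri: str, dataset_name: str) -> Dict[str, str]:
    """Write an HTML file embedding the dataset viewer; return {path: type}."""
    # Assumed URL pattern for the embeddable dataset viewer.
    embed_url = f"https://huggingface.co/datasets/{dataset_name}/embed/viewer"
    html = (
        f'<iframe src="{embed_url}" frameborder="0" '
        'width="100%" height="560px"></iframe>'
    )
    # Store the visualization next to the artifact itself.
    os.makedirs(uri, exist_ok=True)
    path = os.path.join(uri, "viewer.html")
    with open(path, "w", encoding="utf-8") as f:
        f.write(html)
    # Key: where the file lives. Value: what kind of file it is.
    return {path: HTML}


# Usage: a temporary directory plays the role of the artifact store.
with tempfile.TemporaryDirectory() as tmp:
    result = save_viewer_visualization(tmp, "glue")
    print(result)
```

The real materializer works against the artifact store rather than the local file system, but the contract is the same: write the file, then report its path and type.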

You can see the full materializer implementation here.

And that’s that! Now, when any 🤗 Huggingface dataset is returned from a ZenML step in a pipeline, the materializer also embeds the viewer within the ZenML dashboard.

How to embed a 🤗 dataset view in ZenML

Here is a simple example in action that embeds the glue dataset:

Run the above from version 0.62.0 onwards, and you’ll see the following in the ZenML dashboard:

It was a fun two hours spent on a relatively simple but hopefully popular enhancement to the ZenML Huggingface integration. Give us a star if you like it, or say hi on Slack! Till next time.