Last updated: November 21, 2022.

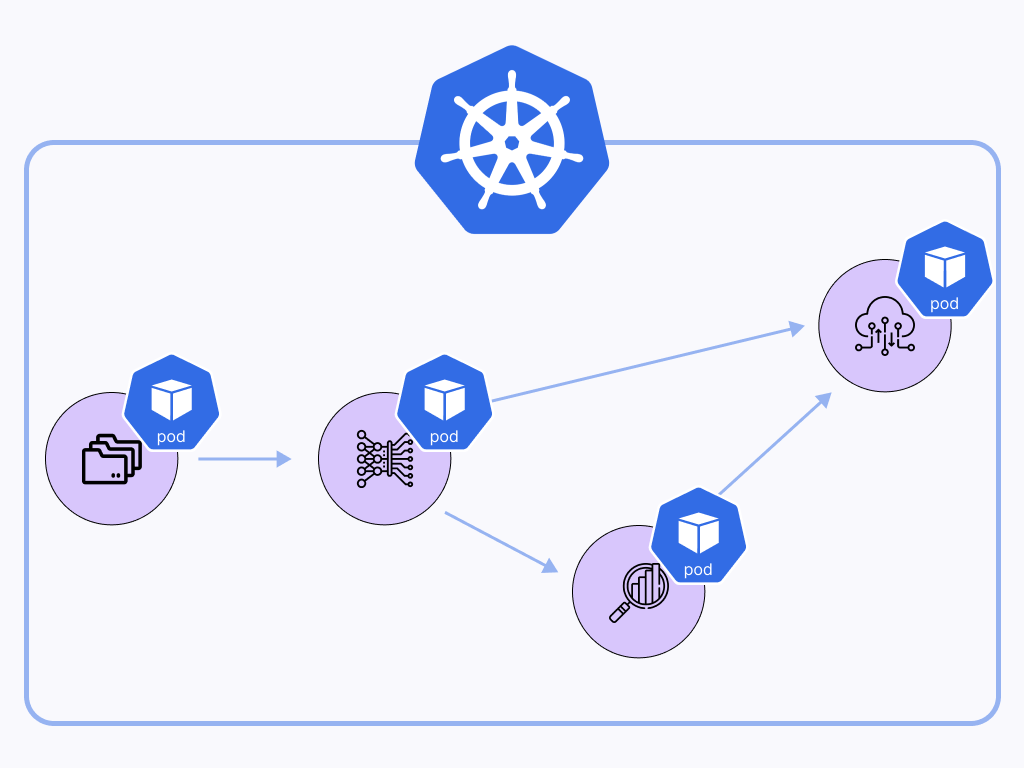

Orchestrating ML workflows natively in Kubernetes has been one of the most requested features at ZenML. We heard you, and we have just released a brand-new Kubernetes-native orchestrator. It executes each pipeline step in a separate pod, streams the logs of all pods to your terminal, and even supports CRON job scheduling.
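To make the one-pod-per-step idea concrete, here is a conceptual sketch in plain Python (standard library only) that builds a minimal Kubernetes Pod manifest for each step of a pipeline. It is not ZenML's implementation: the step names, the image, and the `run_step` entry point are hypothetical, and the `zenml` namespace is simply the one used later in this tutorial.

```python
import re
from typing import Dict, List


def pod_name(pipeline: str, step: str) -> str:
    """Derive a Kubernetes-safe pod name: lowercase alphanumerics and '-', max 63 chars."""
    raw = f"{pipeline}-{step}".lower()
    cleaned = re.sub(r"[^a-z0-9-]+", "-", raw).strip("-")
    return cleaned[:63].rstrip("-")


def step_pod_manifest(pipeline: str, step: str, image: str, namespace: str = "zenml") -> Dict:
    """Build a minimal Pod manifest whose only job is to run one pipeline step."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": pod_name(pipeline, step), "namespace": namespace},
        "spec": {
            # A step should run to completion once, so the pod is never restarted.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "main",
                    "image": image,
                    # Hypothetical entry point; ZenML's real command is different.
                    "command": ["python", "-m", "run_step", "--step", step],
                }
            ],
        },
    }


def pipeline_pods(pipeline: str, steps: List[str], image: str) -> List[Dict]:
    """One pod manifest per step, all built from the same pipeline image."""
    return [step_pod_manifest(pipeline, step, image) for step in steps]
```

Each manifest could then be submitted with the official Python client, for example `kubernetes.client.CoreV1Api().create_namespaced_pod(namespace="zenml", body=manifest)`. Keeping every step in its own pod means each step gets its own container, resources, and logs.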

Now, why would we want to orchestrate ML workflows natively in Kubernetes in the first place, when ZenML already integrates with Kubeflow, which can run jobs on Kubernetes for us? Well, Kubeflow is an awesome, battle-tested tool, and it is arguably the most production-ready Kubernetes orchestration tool out there. However, Kubeflow also comes with many additional requirements and added complexity that not every team wants:

- Kubeflow Pipelines (kfp) alone depends on 21 other packages besides Kubernetes.

- It includes a UI that you might not need, as well as a lot of Google Cloud-specific functionality that is essentially dead code if you use a different cloud provider.

- Most importantly, someone must install it on your cluster, configure it, and actively manage it.

If you are looking for a minimalist, lightweight way of running ML workflows on Kubernetes, this post is for you. By the time we are done, you will be able to orchestrate ML pipelines on Kubernetes without any additional packages apart from the official Kubernetes Python client.

Best of all, if you build out the stack described in this tutorial and then later want to switch to a Kubeflow setup, it will be as easy as changing the orchestrator in your ZenML stack with a single line of code, so you are not locked into anything!

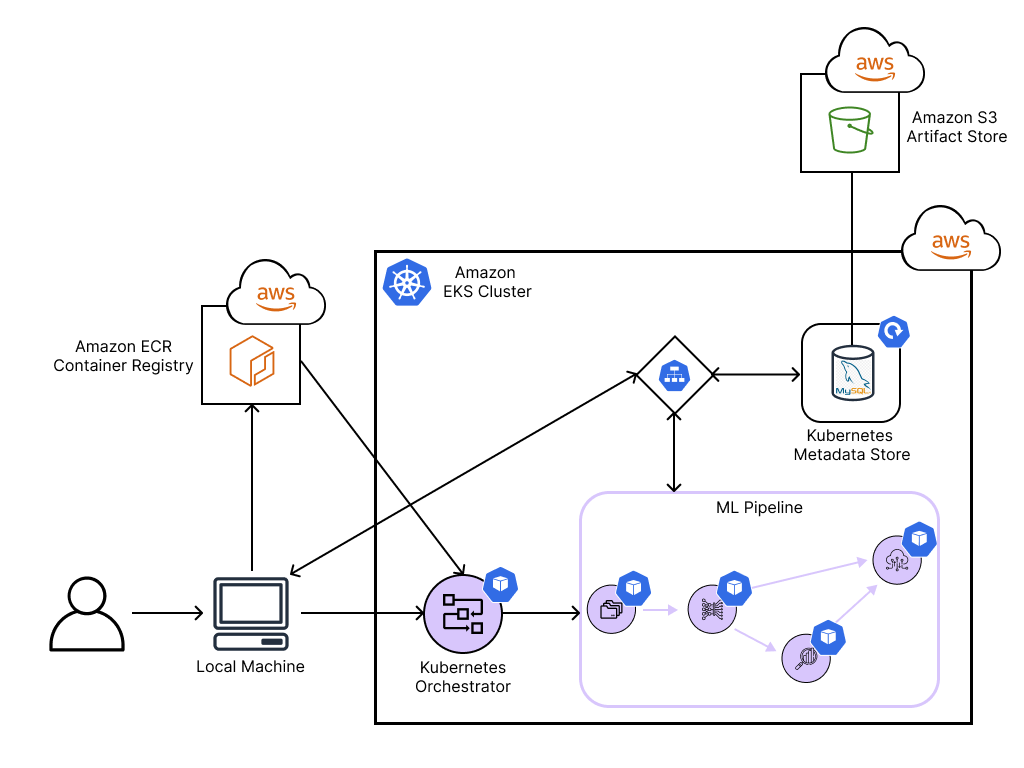

So, let’s get into it and use the new Kubernetes-native orchestrator to easily run ML workflows in a distributed and scalable cloud setting on AWS. To do so, we will provision various resources on AWS: an S3 bucket for artifact storage, an ECR container registry, as well as an Amazon EKS cluster, on which the Kubernetes-native components will run.

The following figure shows an overview of the MLOps stack we will build throughout this tutorial:

Setting Up AWS Resources

In this example, we will use AWS as our cloud provider of choice and provision an EKS Kubernetes cluster, an S3 bucket to store our ML artifacts, and an ECR container registry to manage the Docker images that Kubernetes needs. However, this could also be done in a similar fashion on any other cloud provider.

In this tutorial, we describe two ways to provision the infrastructure. Choose the one you are more comfortable with!

Requirements

In order to follow this tutorial, you need to have the following software installed on your local machine:

- Python (version 3.7-3.9)

- Docker

- kubectl

- AWS CLI, installed and authenticated

- A remote ZenML Server, i.e. a remote deployment of the ZenML HTTP server and database (see the Remote ZenML Server section below)

🚅 Take the Express Train: Terraform-based provisioning of resources

If you’re looking for a really quick way to have all the resources deployed and ready, we have something interesting for you! We are building a set of “recipes” for the most popular MLOps stacks so that you can get to the execution phase faster.

Take a look at mlops-stacks by ZenML 😍. It’s open-source and maintained by the ZenML core team.

Now, coming back to the setup, you can leverage the “eks-s3-seldon-mlflow” recipe for this example.

Note: You need to have Terraform installed to continue.

Follow these steps and you’ll have your stack ready to be registered with ZenML!

1. Clone the mlops-stacks repository.

2. Move into the “eks-s3-seldon-mlflow” directory and open the locals.tf file.

3. Edit the “prefix” variable to your desired name and modify any other variables if needed.

4. Now, run `terraform init`. This downloads the required provider plugins into your working directory and can take up to a minute.

5. After the init has completed, apply your resources by executing `terraform apply`. This gives you an overview of all resources that will be created. Type “yes” and just sit back 😉 It can take up to 20 minutes to set everything up.

6. Your stack is now ready! 🚀
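If you prefer to script steps 4 and 5, the following Python sketch runs the two Terraform commands in order from the recipe directory and stops if one of them fails. It defaults to a dry run that only prints the commands; the helper name and the directory path in the usage line below are assumptions about where you cloned the repository.

```python
import subprocess
from pathlib import Path
from typing import List

# Commands from steps 4 and 5 above, in the order they must run.
RECIPE_COMMANDS: List[List[str]] = [
    ["terraform", "init"],   # downloads the provider plugins
    ["terraform", "apply"],  # prints the plan and waits for you to type "yes"
]


def provision(recipe_dir: str, dry_run: bool = True) -> List[str]:
    """Run the recipe commands inside recipe_dir, stopping at the first failure."""
    workdir = Path(recipe_dir)
    issued = []
    for cmd in RECIPE_COMMANDS:
        issued.append(" ".join(cmd))
        if dry_run:
            print(f"[dry run] {' '.join(cmd)}  (in {workdir})")
        else:
            # check=True raises CalledProcessError, so apply never runs after a failed init.
            subprocess.run(cmd, cwd=workdir, check=True)
    return issued
```

For example, `provision("mlops-stacks/eks-s3-seldon-mlflow", dry_run=False)`. Because stdin is inherited, `terraform apply` still asks you to confirm interactively.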

Now you can skip directly to configuring your local Docker and kubectl! 😎

🚂 Take the Slower Train: Manual provisioning of resources

If you would like to manually provision the resources instead of using the Terraform-based approach described above, this section is for you. You can skip this section if you already have the resources deployed at this point.

EKS Setup

First, create an EKS cluster on AWS according to this AWS tutorial.

S3 Bucket Setup

Next, let us create an S3 bucket where our ML artifacts can later be stored. You can do so by following this AWS tutorial.

The path for your bucket should have the format `s3://your-bucket`.
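A small Python sketch of that format: it splits an `s3://` path into bucket and optional prefix and checks the bucket name against a subset of the S3 naming rules (3 to 63 characters; lowercase letters, digits, dots, and hyphens; starting and ending with a letter or digit). The function is hypothetical and only meant to catch typos before you register the path with ZenML.

```python
import re
from typing import Tuple

# Subset of the general-purpose bucket naming rules (not every rule is checked).
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def parse_s3_path(path: str) -> Tuple[str, str]:
    """Split 's3://bucket[/prefix]' into (bucket, prefix) and sanity-check the bucket name."""
    scheme = "s3://"
    if not path.startswith(scheme):
        raise ValueError(f"expected a path starting with {scheme!r}, got {path!r}")
    bucket, _, prefix = path[len(scheme):].partition("/")
    if not _BUCKET_RE.fullmatch(bucket):
        raise ValueError(f"{bucket!r} is not a valid S3 bucket name")
    return bucket, prefix.strip("/")
```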

Now we still need to authorize our EKS cluster to access the S3 bucket we just created. For simplicity, we do this by attaching the AmazonS3FullAccess policy to the IAM role of the cluster's node group.
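The policy attachment can also be scripted. This sketch calls boto3's `attach_role_policy` on an IAM client passed in by the caller; `grant_s3_access` and the role-name placeholder are hypothetical, and you need to look up the name of your node group's IAM role first.

```python
# ARN of the AWS-managed policy named in the text.
AMAZON_S3_FULL_ACCESS_ARN = "arn:aws:iam::aws:policy/AmazonS3FullAccess"


def grant_s3_access(iam_client, node_role_name: str) -> None:
    """Attach AmazonS3FullAccess to the IAM role used by the EKS node group.

    iam_client is expected to be a boto3 IAM client, e.g. boto3.client("iam").
    """
    iam_client.attach_role_policy(
        RoleName=node_role_name,
        PolicyArn=AMAZON_S3_FULL_ACCESS_ARN,
    )


# Usage (requires boto3 and AWS credentials; the role name is a placeholder):
#   import boto3
#   grant_s3_access(boto3.client("iam"), "<NODE_GROUP_ROLE_NAME>")
```

The CLI equivalent is `aws iam attach-role-policy --role-name <NODE_GROUP_ROLE_NAME> --policy-arn arn:aws:iam::aws:policy/AmazonS3FullAccess`. Passing the client in keeps the function easy to test; for production, a policy scoped to the single artifact bucket is tighter than full S3 access.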

ECR Container Registry Setup

Since each Kubernetes pod needs a Docker image that contains your pipeline code, we also set up an ECR container registry to store those images. You can do so by following this AWS tutorial.

Configure your local kubectl and Docker

All that is left to do is to configure your local kubectl to connect to the EKS cluster and to authenticate your local Docker CLI to connect to the ECR.

Note

If you have used Terraform above, you can obtain the values needed for the following commands by looking at the output of the terraform apply command.
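The commands themselves are not reproduced in this post. As a sketch, the two standard AWS CLI steps are `aws eks update-kubeconfig` (adds the cluster as a context in your kubeconfig) and `aws ecr get-login-password` piped into `docker login`. The Python below wraps them; the function names and all placeholder values (cluster name, region, account ID) are assumptions.

```python
import subprocess
from typing import List


def ecr_registry_uri(account_id: str, region: str) -> str:
    """ECR registry hostnames have the form <account>.dkr.ecr.<region>.amazonaws.com."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def update_kubeconfig_cmd(cluster_name: str, region: str) -> List[str]:
    """Command that adds the EKS cluster as a context in your local kubeconfig."""
    return ["aws", "eks", "update-kubeconfig", "--region", region, "--name", cluster_name]


def docker_login_to_ecr(registry_uri: str, region: str) -> None:
    """Python version of:
    aws ecr get-login-password --region <region> | docker login --username AWS --password-stdin <uri>
    """
    password = subprocess.run(
        ["aws", "ecr", "get-login-password", "--region", region],
        check=True, capture_output=True, text=True,
    ).stdout
    # Pass the token on stdin so it never appears on the command line.
    subprocess.run(
        ["docker", "login", "--username", "AWS", "--password-stdin", registry_uri],
        input=password, check=True, text=True,
    )
```

Usage: `subprocess.run(update_kubeconfig_cmd("<CLUSTER_NAME>", "<AWS_REGION>"), check=True)`, then `docker_login_to_ecr(ecr_registry_uri("<AWS_ACCOUNT_ID>", "<AWS_REGION>"), "<AWS_REGION>")`. Afterwards, `kubectl config current-context` prints the name of the new context, which is what you later pass to ZenML as <KUBE_CONTEXT>.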

Remote ZenML Server

For advanced use cases, such as using a remote orchestrator like Vertex AI or sharing stacks and pipeline information with your team, you need a separate, non-local ZenML Server that is accessible from your machine as well as from all stack components that may need to reach it. Read more about this use case here.

There are two ways to get access to a remote ZenML Server:

- Deploy and manage the server manually on your own cloud.

- Sign up for ZenML Enterprise and get access to a hosted version of the ZenML Server with no setup required.

Run an example with ZenML

Let’s now see the Kubernetes-native orchestration in action with a simple example using ZenML.

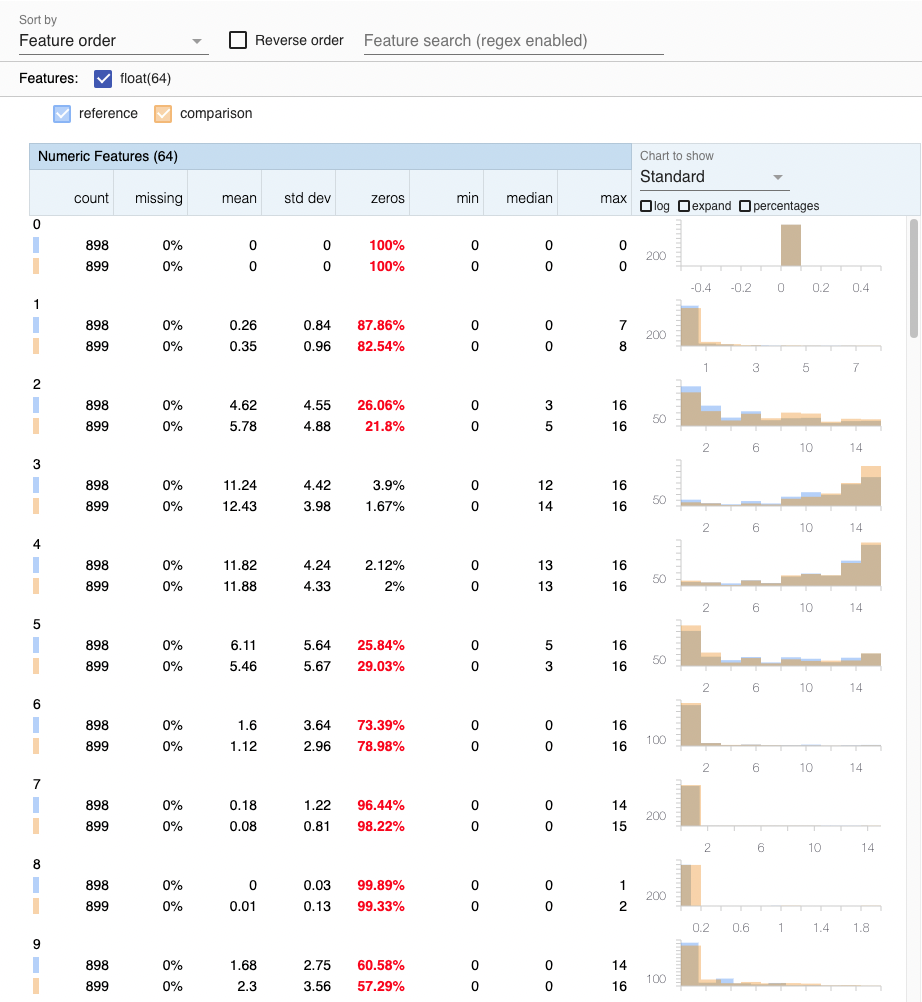

The following code defines a four-step pipeline that loads NumPy training and test datasets, checks them for training-serving skew with Facets, trains a sklearn model on the training set, and then evaluates it on the test set:

In order to run this code later, simply copy it into a file called run.py.
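The ZenML code for the pipeline is not reproduced in this version of the post. To show what the four steps compute and how data flows between them, here is a framework-free sketch that runs with the Python standard library alone. It is not the ZenML pipeline and does not belong in run.py: there are no ZenML decorators, the data is synthetic, a mean-difference check stands in for Facets, and a nearest-centroid classifier stands in for the sklearn model. In the ZenML version, each of these functions corresponds to a step that the Kubernetes orchestrator runs in its own pod.

```python
import random
from statistics import mean
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def importer(seed: int = 0, n: int = 200) -> Tuple[List[Point], List[Point], List[int], List[int]]:
    """Step 1: generate two Gaussian blobs (labels 0 and 1) and split them 80/20."""
    rng = random.Random(seed)
    X: List[Point] = []
    y: List[int] = []
    for _ in range(n):
        label = rng.randint(0, 1)
        center = 0.0 if label == 0 else 3.0
        X.append((rng.gauss(center, 1.0), rng.gauss(center, 1.0)))
        y.append(label)
    split = int(0.8 * n)
    return X[:split], X[split:], y[:split], y[split:]


def skew_check(X_train: List[Point], X_test: List[Point], tolerance: float = 0.5) -> Dict[int, float]:
    """Step 2: per-feature absolute mean difference between train and test (Facets stand-in)."""
    shifts = {j: abs(mean(x[j] for x in X_train) - mean(x[j] for x in X_test)) for j in range(2)}
    for j, shift in shifts.items():
        if shift > tolerance:
            print(f"warning: feature {j} mean differs by {shift:.2f} between train and test")
    return shifts


def trainer(X_train: List[Point], y_train: List[int]) -> Dict[int, Point]:
    """Step 3: nearest-centroid classifier, i.e. one mean point per class (sklearn stand-in)."""
    model: Dict[int, Point] = {}
    for label in sorted(set(y_train)):
        points = [x for x, t in zip(X_train, y_train) if t == label]
        model[label] = (mean(p[0] for p in points), mean(p[1] for p in points))
    return model


def predict(model: Dict[int, Point], x: Point) -> int:
    """Assign x to the class whose centroid is closest (squared Euclidean distance)."""
    return min(model, key=lambda c: (x[0] - model[c][0]) ** 2 + (x[1] - model[c][1]) ** 2)


def evaluator(model: Dict[int, Point], X_test: List[Point], y_test: List[int]) -> float:
    """Step 4: accuracy on the held-out test split."""
    correct = sum(predict(model, x) == t for x, t in zip(X_test, y_test))
    return correct / len(y_test)


def run_pipeline(seed: int = 0) -> float:
    """Wire the four steps together in the same order as the example pipeline."""
    X_train, X_test, y_train, y_test = importer(seed)
    skew_check(X_train, X_test)
    model = trainer(X_train, y_train)
    return evaluator(model, X_test, y_test)


if __name__ == "__main__":
    print(f"test accuracy: {run_pipeline():.2f}")
```

Running the sketch prints the test accuracy; a warning appears only if a feature's mean differs between the splits by more than the (arbitrary) tolerance of 0.5.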

Next, install zenml, as well as its sklearn, facets, kubernetes, aws, and s3 integrations:
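A Python sketch of this installation: pip installs ZenML into the interpreter you run it with, and then `zenml integration install` installs the requirements of the five integrations listed above. The helper names are hypothetical, and the ZenML CLI may ask you to confirm before it installs anything.

```python
import subprocess
import sys
from typing import List

# Integrations named in the text above.
INTEGRATIONS = ["sklearn", "facets", "kubernetes", "aws", "s3"]


def install_commands() -> List[List[str]]:
    """pip-install ZenML into the current interpreter, then let ZenML install the integrations."""
    return [
        [sys.executable, "-m", "pip", "install", "zenml"],
        ["zenml", "integration", "install", *INTEGRATIONS],
    ]


def install(dry_run: bool = True) -> None:
    """Print (dry run) or execute the installation commands in order."""
    for cmd in install_commands():
        if dry_run:
            print("[dry run]", " ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```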

Registering a ZenML Stack

To bring the Kubernetes orchestrator and all the AWS infrastructure together, we register them in a ZenML stack.

First, initialize ZenML in the same folder where you created the run.py file:

Next, register the Kubernetes orchestrator using the <KUBE_CONTEXT> of the EKS cluster you configured above:

Similarly, use the <ECR_REGISTRY_NAME> and <REMOTE_ARTIFACT_STORE_PATH> you defined when setting up the ECR and S3 components to register them in ZenML. If you used the recipe, the ECR registry name has the format `<AWS_ACCOUNT_ID>.dkr.ecr.<AWS_REGION>.amazonaws.com`, and the artifact store path can be taken from the output of the `terraform apply` command.

Now we can bring everything together in a ZenML stack:

Let’s set this stack as active, so we use it by default for the remainder of this tutorial:
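The registration steps of this section can also be scripted. The sketch below assembles the ZenML CLI calls as argument lists: `zenml init`, one `register` call per component, `zenml stack register`, and `zenml stack set`. The component and stack names (`k8s_orchestrator`, `ecr_registry`, `s3_store`, `k8s_stack`) are hypothetical, and the flags reflect our understanding of the ZenML CLI at the time of writing (0.20 era), so please verify them with `zenml orchestrator register --help` (and likewise for the other commands) for your installed version.

```python
import subprocess
from typing import List


def stack_commands(kube_context: str, ecr_registry: str, artifact_store_path: str) -> List[List[str]]:
    """CLI calls for: init, three component registrations, stack registration, activation."""
    return [
        ["zenml", "init"],
        ["zenml", "orchestrator", "register", "k8s_orchestrator",
         "--flavor=kubernetes", f"--kubernetes_context={kube_context}"],
        ["zenml", "container-registry", "register", "ecr_registry",
         "--flavor=aws", f"--uri={ecr_registry}"],
        ["zenml", "artifact-store", "register", "s3_store",
         "--flavor=s3", f"--path={artifact_store_path}"],
        ["zenml", "stack", "register", "k8s_stack",
         "-o", "k8s_orchestrator", "-c", "ecr_registry", "-a", "s3_store"],
        ["zenml", "stack", "set", "k8s_stack"],
    ]


def register_stack(kube_context: str, ecr_registry: str, artifact_store_path: str,
                   dry_run: bool = True) -> None:
    """Run the commands in order; call this from the folder that contains run.py."""
    for cmd in stack_commands(kube_context, ecr_registry, artifact_store_path):
        if dry_run:
            print("[dry run]", " ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```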

Spinning Up Resources

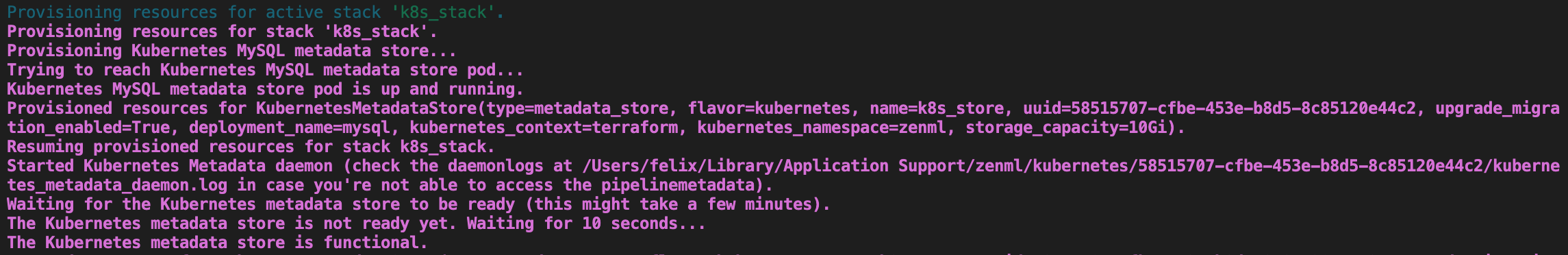

Once all of our components are defined together in a ZenML stack, we can spin them all up at once with a single command:

If everything went well, you should see log messages similar to the following in your terminal:

Running the Example

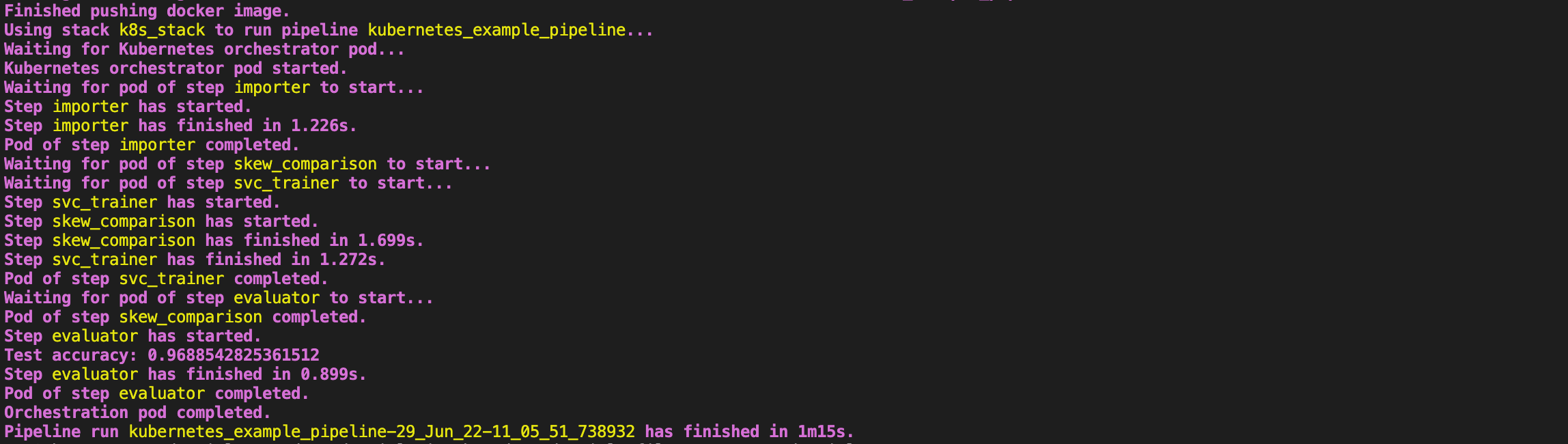

Having all of our MLOps components registered in a ZenML stack now makes it trivial to run our example on Kubernetes in the cloud. Simply execute `python run.py`.

This first builds a Docker image locally that includes your ML pipeline code from run.py, then pushes it to the ECR registry, and finally executes everything on the EKS cluster.

If all went well, you should now see the logs of all Kubernetes pods in your terminal, similar to what is shown below.

Additionally, a window should have opened in your local browser where you can see a training-serving skew analysis in Facets like the following:

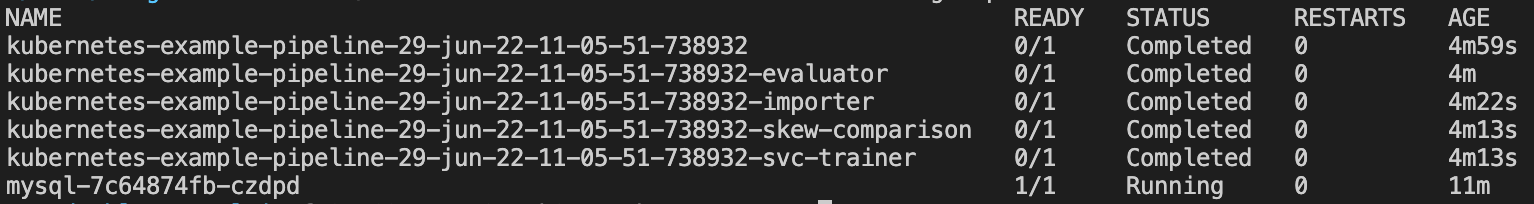

When you run `kubectl get pods -n zenml`, you should also see that a pod was created in your cluster for each pipeline step:
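To check this programmatically, the following sketch asks kubectl for JSON output and maps each pod name to its status phase. The function names are hypothetical; the sketch only relies on the `kubectl get pods -n zenml -o json` call and the standard pod fields `metadata.name` and `status.phase`.

```python
import json
import subprocess
from typing import Dict


def pods_from_kubectl_json(raw: str) -> Dict[str, str]:
    """Map pod name -> status phase from the output of `kubectl get pods -o json`."""
    data = json.loads(raw)
    return {
        item["metadata"]["name"]: item.get("status", {}).get("phase", "Unknown")
        for item in data.get("items", [])
    }


def list_pods(namespace: str = "zenml") -> Dict[str, str]:
    """Ask kubectl (using your current context) for the pods in the namespace."""
    raw = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return pods_from_kubectl_json(raw)
```

After a successful run, the step pods should report the phase `Succeeded`. The namespace may also contain pods from earlier runs, so compare pod names rather than only counting them.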

Cleanup

Delete Example Run Pods

If you just want to delete the pods created by the example run, execute the following command:
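The exact command is not reproduced here. As a hedged sketch: `kubectl delete pods` accepts either a label selector (`-l`) or `--all`, and the hypothetical helper below builds either form. This post does not cover which labels ZenML puts on its pods, so inspect them with `kubectl get pods -n zenml --show-labels` before choosing a selector.

```python
from typing import List, Optional


def delete_pods_cmd(namespace: str = "zenml", selector: Optional[str] = None) -> List[str]:
    """Build a kubectl command that deletes pods by label selector, or all pods if none is given."""
    cmd = ["kubectl", "delete", "pods", "-n", namespace]
    # Without a selector, --all removes every pod in the namespace, not only this run's pods.
    cmd += ["-l", selector] if selector else ["--all"]
    return cmd
```

Usage: `subprocess.run(delete_pods_cmd(selector="<LABEL_KEY>=<LABEL_VALUE>"), check=True)`, where the label placeholders come from the `--show-labels` output.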

Delete AWS Resources

Lastly, if you want to deprovision all of the infrastructure and you created the resources manually, simply delete them in your AWS console.

If you used the stack recipe, simply run `terraform destroy` from the recipe directory; it takes care of removing all resources and dependencies.

Conclusion

In this tutorial, we learned about orchestration on Kubernetes, set up EKS, ECR, and S3 resources on AWS, and saw how this enables us to run arbitrary ML pipelines in a scalable cloud environment. Using ZenML, we were able to do all of this without having to change a single line of our ML code. Furthermore, it will now be almost trivial to switch out stack components whenever our requirements change.

If you have any questions or feedback regarding this tutorial, join our weekly community hour.

If you want to know more about ZenML or see more examples, check out our docs and examples or join our Slack.
