Your team has developed a breakthrough machine learning model with impressive metrics in your development environment. Stakeholders are excited about the potential impact. But now comes the truly challenging part—bridging the gap between that promising prototype and a reliable production tool that end users can trust with real-world decisions.

Why ML Deployment Is Uniquely Challenging

Data scientists who excel at building sophisticated algorithms often find deployment to be unfamiliar territory. The hurdles span model serving, monitoring, and governance, and they typically surface as:

- Reproducibility problems: When deployment involves manual handoffs between data scientists and IT teams, subtle inconsistencies between environments can cause unexplained performance degradation once real-world data arrives.

- Versioning complexity: Without systematic tracking, teams may unknowingly serve outdated models, or different departments may rely on different versions of the same model.

- Audit requirements: Regulated industries require comprehensive audit trails that can trace every prediction back to its training data, code, and validation processes—something most manual workflows can't support.

Enterprise Solutions: Powerful but Complex

The industry has responded with sophisticated model deployment platforms: tools like Seldon Core, BentoML, and hyperscaler solutions like SageMaker, Vertex AI, and Azure ML. These platforms offer powerful capabilities but introduce critical trade-offs:

- Specialized Expertise Requirements: They demand dedicated DevOps/MLOps skills that core data science teams often lack.

- Infrastructure Complexity: Self-hosted platforms involve Kubernetes orchestration, security hardening, and networking configuration; managed alternatives instead create hyperscaler ecosystem dependencies that limit multi-cloud flexibility.

- Learning Curve and Cost Considerations: Kubernetes-based solutions take substantial setup time, while managed platforms onboard quickly but bring hidden compliance complexity and ongoing expenses.

For many organizations, these enterprise solutions address deployment needs in theory but, in practice, create obstacles that slow the iterative development cycles essential to ML's value proposition.

Finding a Practical Middle Ground in the MLOps Spectrum

What if you could avoid most of the infrastructure burden while still gaining benefits like reproducibility, automation, and traceable model behavior? This is where frameworks like ZenML can bridge the gap by offering structured, reproducible ML workflows without requiring a full DevOps overhaul.

The key is integrating with existing deployment platforms through components that let you deploy models locally or in production, track running services, and manage model servers from Python or the CLI, while keeping model tracking, versioning, and promotion logic consistent regardless of where you deploy.

This flexible integration approach allows teams to leverage existing tools and platforms while maintaining a consistent workflow structure and artifact lineage.

Model Handover to Production Service

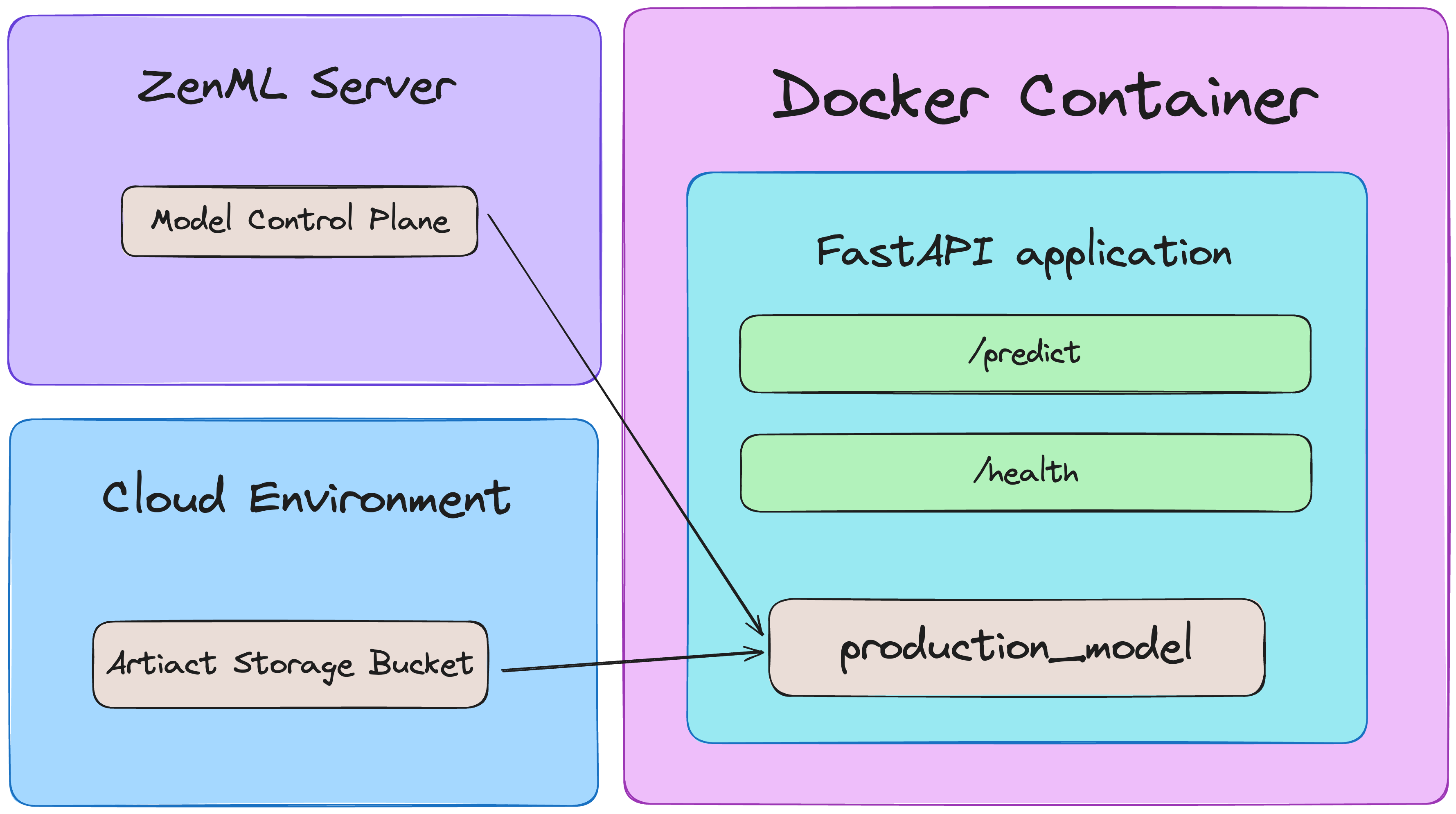

A key decision in ML deployment is how your production service accesses the specific model version that your pipeline selected. ZenML orchestrates model selection, but your serving infrastructure doesn't need ZenML access at runtime. This separation creates a clean boundary between environments. Here are two ways to achieve this decoupling:

Bake-In Approach: Embedding the Model in the Image

Best for: Smaller models where image size isn't a major concern and updates don't happen extremely frequently (as each model update requires a new image build).

In this approach, your deployment pipeline fetches the approved model artifact while building the image and embeds it directly inside the Docker image. At runtime, your FastAPI service simply loads this pre-packaged model from a predefined local path (no ZenML connection required), which results in a completely self-contained deployment unit.
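
On the serving side, the bake-in pattern reduces to loading a file from a path fixed at build time. A minimal sketch, assuming the model was serialized with `pickle` and copied during the image build to a hypothetical path `/app/model/model.pkl` (the actual project may use a different serializer or location):

```python
import pickle
from pathlib import Path
from typing import Any

# Hypothetical path that the image build copies the approved model into.
BAKED_MODEL_PATH = Path("/app/model/model.pkl")


def load_baked_model(path: Path = BAKED_MODEL_PATH) -> Any:
    """Load the model that was embedded in the image at build time.

    No registry or ZenML connection is needed: the artifact is part of the
    image, so a missing file means the image itself was built incorrectly.
    """
    if not path.is_file():
        raise FileNotFoundError(f"Baked-in model not found at {path}; rebuild the image")
    with path.open("rb") as f:
        # Only unpickle artifacts you produced yourself; pickle can execute code.
        return pickle.load(f)


# Load once at service startup so every request reuses the same model object:
# MODEL = load_baked_model()
```

Because the path never changes, updating the model means building and rolling out a new image, which is exactly the trade-off described above.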

Volume-Mount Approach: Externalizing the Model

Best for: Large models (to keep image sizes down) or scenarios requiring frequent model updates without rebuilding the entire service image.

Here, the model artifact is placed in a host-accessible storage location, and the container mounts that location at runtime, so the service reads the model without it being embedded in the image. Models can then be swapped without rebuilding the container, and models too large to package into an image remain deployable.
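
A volume-mounted model can be picked up again when the file on the mount changes. The sketch below is one way to do that, assuming a pickled model and a hypothetical `MODEL_PATH` environment variable pointing at the file inside the mounted directory; it compares the file's modification time on each access and reloads when it differs:

```python
import os
import pickle
from pathlib import Path
from typing import Any, Optional


class MountedModel:
    """Serve a model read from a volume-mounted path, reloading it when the file changes.

    MODEL_PATH is a hypothetical environment variable naming the model file
    inside the mount (e.g. a container started with -v /models:/mnt/models:ro).
    """

    def __init__(self, path: Optional[str] = None) -> None:
        self.path = Path(path or os.environ.get("MODEL_PATH", "/mnt/models/model.pkl"))
        self._mtime: Optional[float] = None
        self._model: Any = None

    def get(self) -> Any:
        # A modification time different from the last load means a new
        # model file was dropped onto the mount, so load it again.
        mtime = self.path.stat().st_mtime
        if self._model is None or mtime != self._mtime:
            with self.path.open("rb") as f:
                self._model = pickle.load(f)  # only unpickle trusted artifacts
            self._mtime = mtime
        return self._model
```

When publishing a new model to the mount, writing it under a temporary name and then renaming it into place (`os.replace`, on the same filesystem) avoids the service reading a half-written file.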

Choosing the Right Approach

Both approaches share the same benefits: they use ZenML for end-to-end versioning and artifact tracking during training, and they keep the same clean runtime separation from your development infrastructure. For many teams, starting with the bake-in pattern provides the simplest path to production, while the volume-mount pattern is a natural evolution as model complexity grows.

Let's now look at how to implement the deployment pipeline itself using ZenML steps. The following examples illustrate the core structure, which can be adapted to fit either the bake-in or volume-mount pattern depending on your chosen strategy.

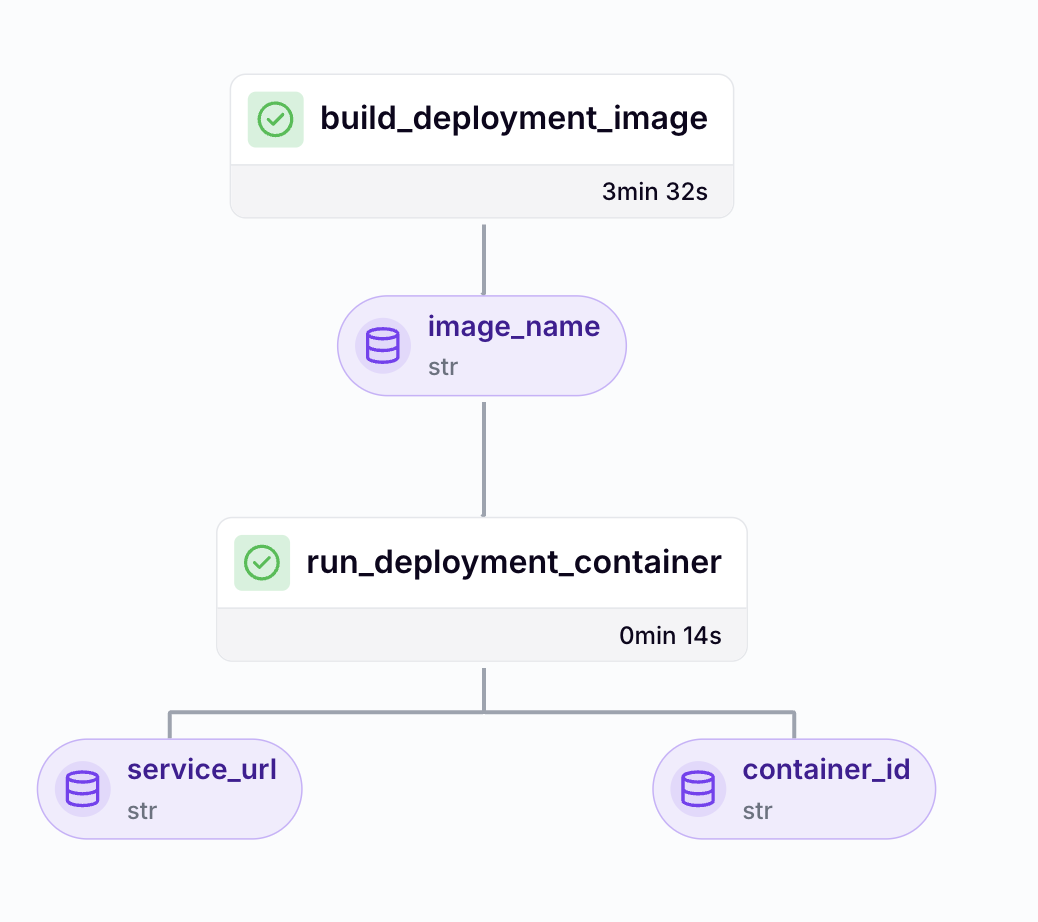

Deployment as a Pipeline: How It Works in Practice

At the heart of this approach is a deployment pipeline powered by automatic tracking infrastructure. Each deployment run is versioned, reproducible, and fully traceable with complete logs of inputs, outputs, environment variables, and pipeline context.

Let's walk through a simplified deployment pipeline that builds a Docker image and runs a FastAPI service based on a promoted model:

Step 1: Building a Deployment Image

We define a pipeline step that packages our model, preprocessing logic, and service code into a self-contained Docker image.

This step ensures the image is versioned and tied to the exact model and preprocessing artifacts used during training.
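
A minimal sketch of the packaging logic, written as plain Python so it runs without ZenML or Docker installed (in the project it would sit inside a ZenML `@step`). The Dockerfile template, the `python:3.11-slim` base image, the `service.app:app` module, and the tagging scheme (model version plus a short content hash) are all assumptions for illustration:

```python
import hashlib
import shutil
import tempfile
from pathlib import Path
from typing import List, Tuple

# Hypothetical Dockerfile; base image, layout, and entrypoint are placeholders.
DOCKERFILE_TEMPLATE = """\
FROM python:3.11-slim
WORKDIR /app
COPY service/ ./service/
RUN pip install --no-cache-dir -r service/requirements.txt
COPY model/ ./model/
CMD ["uvicorn", "service.app:app", "--host", "0.0.0.0", "--port", "8000"]
"""


def prepare_build_context(
    model_file: Path,
    preprocessor_file: Path,
    service_dir: Path,
    image_name: str,
    model_version: str,
) -> Tuple[Path, str, List[str]]:
    """Assemble a Docker build context and return (context_dir, image_tag, build_cmd)."""
    context = Path(tempfile.mkdtemp(prefix="deploy-ctx-"))
    shutil.copytree(service_dir, context / "service")
    (context / "model").mkdir()
    shutil.copy2(model_file, context / "model" / model_file.name)
    shutil.copy2(preprocessor_file, context / "model" / preprocessor_file.name)
    (context / "Dockerfile").write_text(DOCKERFILE_TEMPLATE)

    # Tag = model version + short hash of the packaged artifacts, so the tag
    # changes whenever the model or preprocessor bytes change.
    digest = hashlib.sha256()
    for f in (model_file, preprocessor_file):
        digest.update(f.read_bytes())
    tag = f"{image_name}:{model_version}-{digest.hexdigest()[:12]}"

    build_cmd = ["docker", "build", "-t", tag, str(context)]
    return context, tag, build_cmd
```

Passing `build_cmd` to `subprocess.run(build_cmd, check=True)` performs the actual build. Deriving the tag from the artifact bytes keeps the link between image and model explicit; note that `model_version` must itself be valid inside a Docker tag (letters, digits, `_`, `.`, `-`).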

Step 2: Running the Container

The next step spins up the containerized service.

This step logs deployment metadata (container ID, URL, timestamp) into ZenML, creating a traceable snapshot of what was deployed, when, and from where.
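
The container launch can be sketched the same way. Here `docker run -d` starts the service in the background and prints the new container's ID, which is combined with the URL and a UTC timestamp into a metadata record of the kind this step describes. The `run` parameter exists only so the function can be exercised without Docker, and the port defaults are assumptions; how the record is attached to ZenML depends on the ZenML version's metadata-logging utilities, so that call is left out:

```python
import subprocess
from datetime import datetime, timezone
from typing import Callable, Dict, List


def run_container(
    image_tag: str,
    host_port: int = 8000,
    container_port: int = 8000,
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> Dict[str, str]:
    """Start the service container and return deployment metadata."""
    cmd: List[str] = [
        "docker", "run", "-d",                  # detached: prints the container ID
        "-p", f"{host_port}:{container_port}",  # expose the service on the host
        image_tag,
    ]
    result = run(cmd, check=True, capture_output=True, text=True)
    return {
        "container_id": result.stdout.strip(),
        "url": f"http://localhost:{host_port}",
        "image": image_tag,
        "deployed_at": datetime.now(timezone.utc).isoformat(),
    }
```

For the volume-mount pattern, the same command would gain a flag such as `-v /host/models:/mnt/models:ro` (paths hypothetical) to mount the model directory read-only.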

Tying It All Together in a Deployment Pipeline
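
In ZenML, the two steps would be composed inside a `@pipeline`-decorated function, and the pipeline's model configuration can point at the version holding a given stage (for example `Model(name=..., version="production")`). The sketch below shows the same control flow in plain Python: the stage mapping and the step callables are stand-ins, so the names here are hypothetical rather than the project's code:

```python
from typing import Callable, Dict, Mapping


def deployment_pipeline(
    stages: Mapping[str, str],
    build_image: Callable[[str], str],
    run_container: Callable[[str], Dict[str, str]],
) -> Dict[str, str]:
    """Deploy whichever model version currently holds the 'production' stage."""
    production = [version for version, stage in stages.items() if stage == "production"]
    if len(production) != 1:
        # Refuse to guess: zero or several production versions is a registry problem.
        raise RuntimeError(f"expected exactly one production version, found {production}")
    version = production[0]

    image_tag = build_image(version)       # step 1: package model + service
    deployment = run_container(image_tag)  # step 2: start the service
    # Keep the model version next to the runtime metadata for traceability.
    return {"model_version": version, "image": image_tag, **deployment}
```

Selecting by stage rather than by version number is what lets the promotion system, not the deployment code, decide what goes live.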

Implementing Model Promotion Systems

While deploying models is critical, equally important is determining which models should reach production in the first place. In traditional ML workflows, this decision often relies on informal team discussions or manual comparisons—introducing unnecessary risk for critical applications.

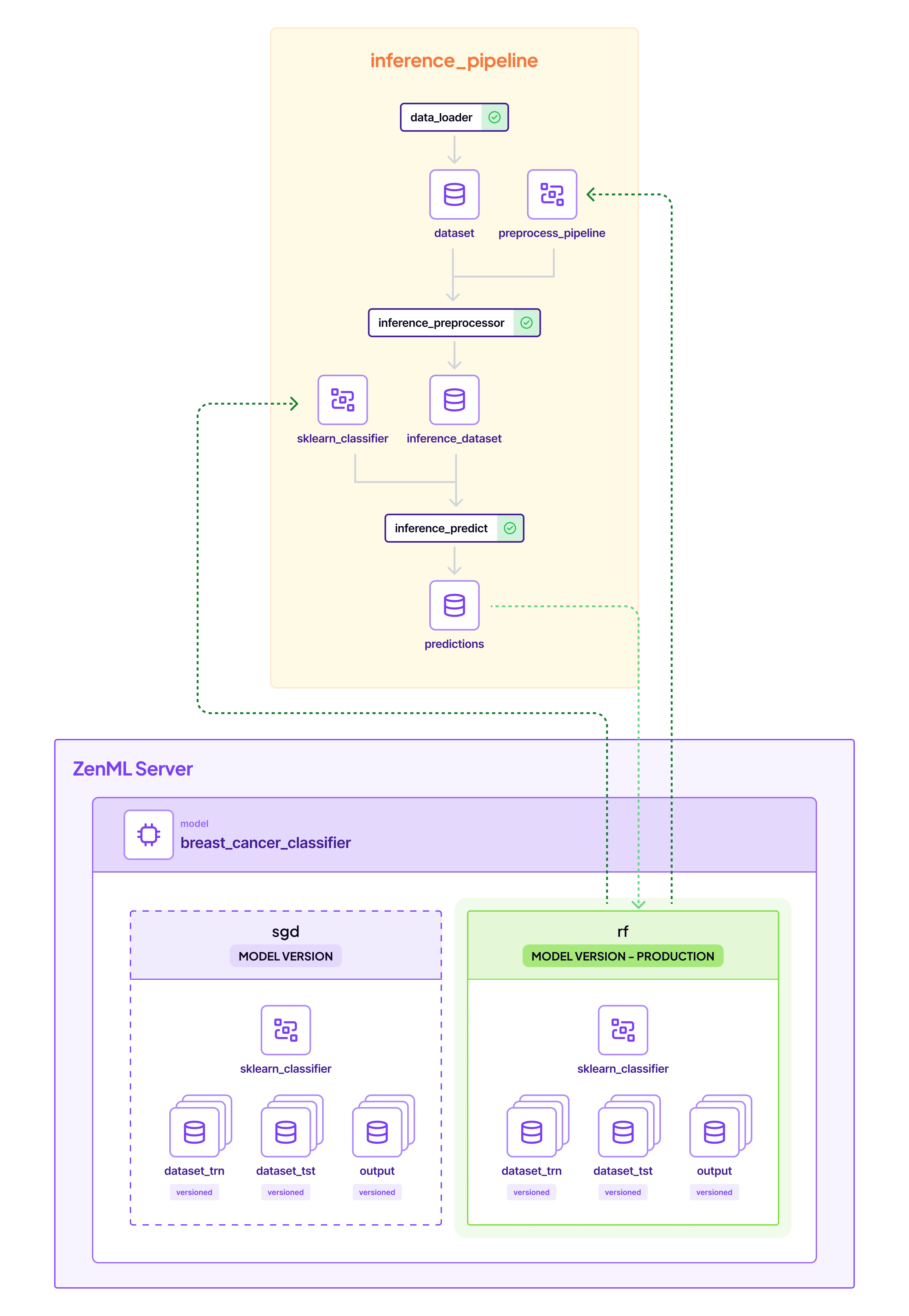

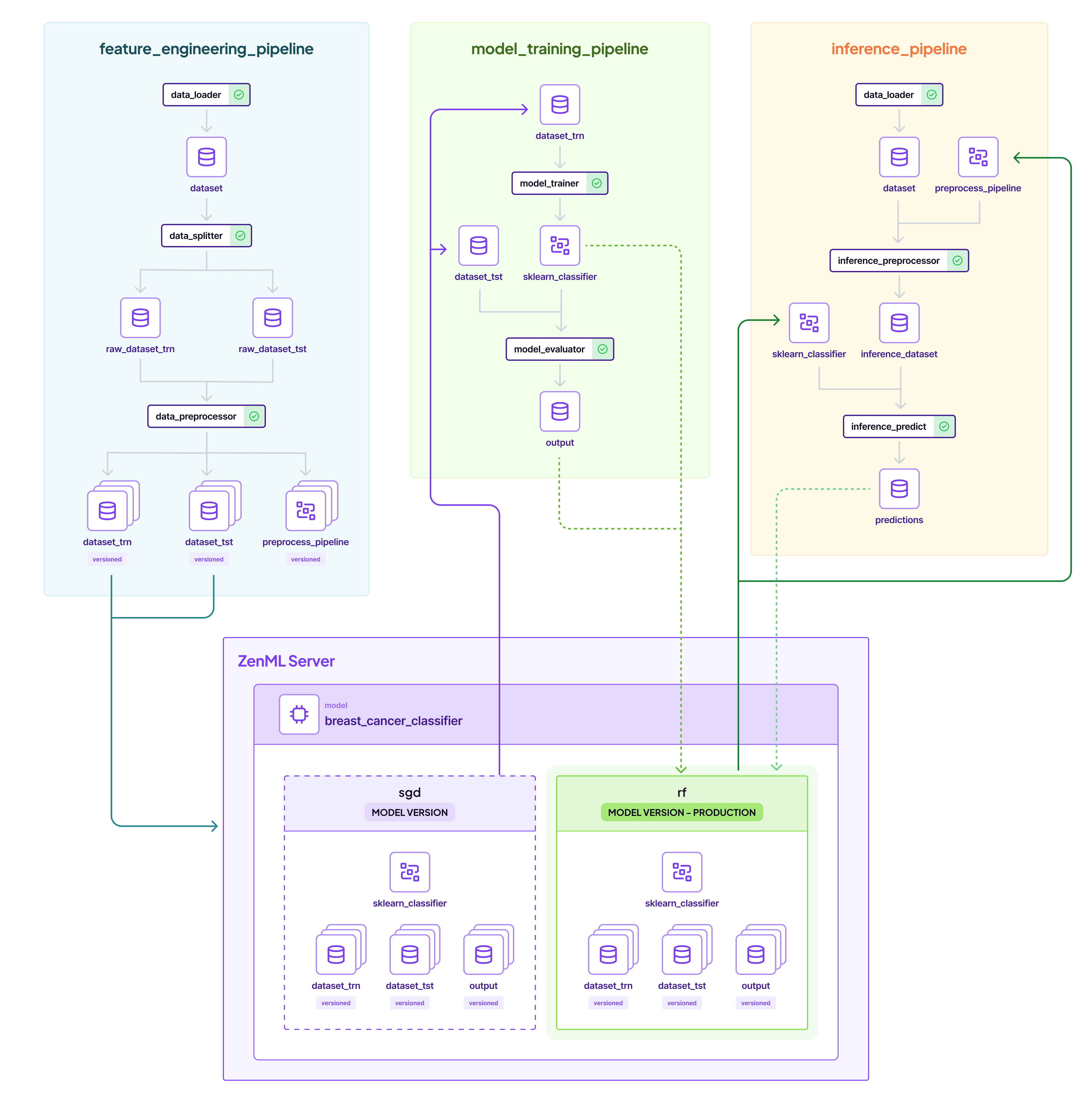

An automated model promotion system applies objective criteria to determine which models reach production. A Model Control Plane can serve as a model registry, version manager, and lifecycle tracker, enabling:

- Versioning: Tracking all promoted models within a pipeline with full lineage and metadata

- Promotion Logic: Enabling models to be promoted to staging, production, or custom stages based on objective criteria

- Deployment Integration: Defining deployments declaratively, ensuring reproducibility and auditability

Models vs. Model Control Plane: When we refer to a "Model" in the context of a Model Control Plane, we're talking about a unifying business concept that tracks all components related to solving a particular problem — not just the technical model file (weights and parameters) produced by training algorithms. A Model Control Plane organizes pipelines, artifacts, metadata, and configurations that together represent the full lifecycle of addressing a specific use case.
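
To make the distinction concrete, here is a toy in-memory version of that idea. It is not ZenML's API: `TrackedModel`, `ModelVersion`, and their fields are hypothetical, chosen to show a business-level model grouping versions, each carrying its own artifacts, metadata, optional pipeline run, and lifecycle stage:

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class ModelVersion:
    number: int
    artifacts: Dict[str, str] = field(default_factory=dict)  # name -> artifact URI
    metadata: Dict[str, Any] = field(default_factory=dict)   # metrics, config, ...
    pipeline_run: Optional[str] = None  # None for externally produced models
    stage: Optional[str] = None         # e.g. "staging", "production", "archived"


@dataclass
class TrackedModel:
    """A business-level model: every version, artifact, and run for one use case."""
    name: str
    versions: List[ModelVersion] = field(default_factory=list)

    def register(self, **kwargs: Any) -> ModelVersion:
        version = ModelVersion(number=len(self.versions) + 1, **kwargs)
        self.versions.append(version)
        return version

    def promote(self, number: int, stage: str) -> None:
        # A stage is held by at most one version: archive the current holder first.
        for v in self.versions:
            if v.stage == stage:
                v.stage = "archived"
        self.versions[number - 1].stage = stage

    def get(self, stage: str) -> Optional[ModelVersion]:
        return next((v for v in self.versions if v.stage == stage), None)
```

The rule that a stage has at most one holder is what allows downstream code to ask for "the production version" without knowing its number.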

A promotion step evaluates a new model's metrics against the current production baseline.
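
The core of such a step is a small decision function. In this sketch the metric name, the thresholds, and the assumption that higher scores are better are all illustrative; in a ZenML step, a `True` result would typically be followed by promoting the model version, e.g. with `Model.set_stage("production", force=True)`:

```python
from typing import Mapping, Optional


def should_promote(
    candidate: Mapping[str, float],
    production: Optional[Mapping[str, float]],
    metric: str = "accuracy",
    min_score: float = 0.8,
    min_improvement: float = 0.0,
) -> bool:
    """Promote only if the candidate clears an absolute bar and beats production."""
    score = candidate[metric]
    if score < min_score:
        return False  # fails the minimum quality threshold outright
    if production is None:
        return True   # nothing in production yet: the first model clearing the bar wins
    # Require a strict improvement (optionally by a margin) over the current baseline.
    return score > production[metric] + min_improvement
```

Keeping both checks matters: the absolute threshold blocks a poor first model, and the relative comparison blocks regressions once a baseline exists.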

The power of this approach lies in the automatic tracking and versioning of every model and pipeline run behind the scenes. This translates informal practices ("this model seems good enough") into codified quality standards that align with organizational expectations for evidence-based decision making.

One key advantage of using a dedicated Model Control Plane over traditional model registries is the decoupling from experiment tracking systems. This means you can log and manage models even if they weren't trained via a specific pipeline—perfect for integrating pre-trained or externally produced models.

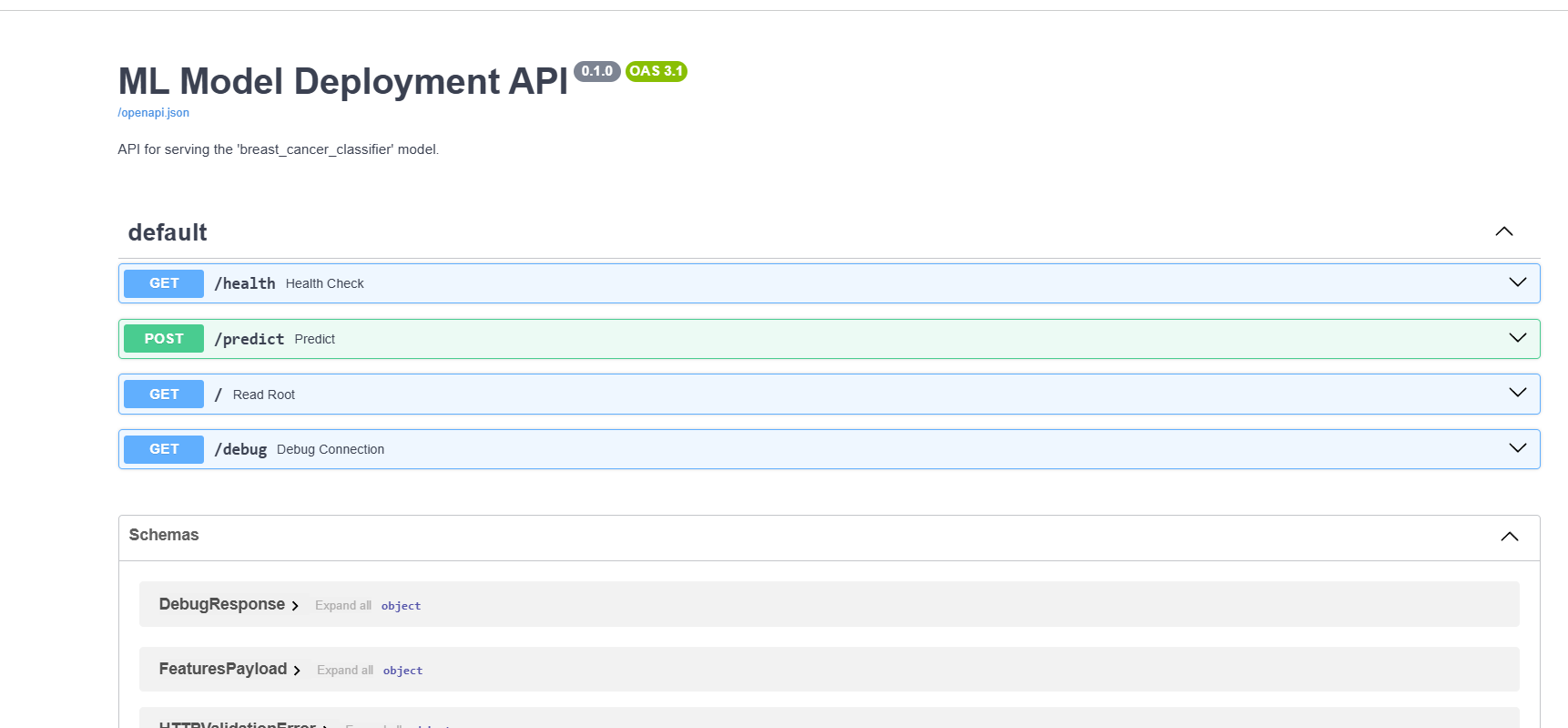

The API Interface: Where ML Meets Real-World Applications

Once models are deployed and promoted, end users need to reach them through a reliable API. A well-designed FastAPI service ensures consistent preprocessing, proper error handling, and comprehensive logging.

The prediction endpoint in this project follows several critical best practices:

- Bundled preprocessing: The service automatically applies the same transformations used during training

- Graceful degradation: If preprocessing fails, the service falls back to using raw input

- Type conversion: Model outputs are converted to JSON-serializable types before being returned

- Comprehensive logging: Each prediction is logged with inputs, outputs, and metadata
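
The four practices above can be sketched as a framework-agnostic handler. The function names, the `model_version` field in the response, and the assumption that `model.predict` returns something with a `tolist()` method (as NumPy arrays and scalars do) are illustrative; in a FastAPI service, a `@app.post(...)` route would parse the request body and delegate to `predict`:

```python
import logging
from datetime import datetime, timezone
from typing import Any, Callable, Dict, List, Optional

logger = logging.getLogger("prediction_service")


def to_serializable(value: Any) -> Any:
    """Convert NumPy-style outputs into plain Python types for a JSON response."""
    if hasattr(value, "tolist"):  # NumPy arrays and NumPy scalars both provide tolist()
        return value.tolist()
    if isinstance(value, (list, tuple)):
        return [to_serializable(v) for v in value]
    return value


def predict(
    features: List[Dict[str, Any]],
    model: Any,
    preprocess: Optional[Callable[[List[Dict[str, Any]]], Any]],
    model_version: str,
) -> Dict[str, Any]:
    # Bundled preprocessing: apply the training-time transform when available.
    model_input: Any = features
    if preprocess is not None:
        try:
            model_input = preprocess(features)
        except Exception:
            # Graceful degradation: record the failure and fall back to raw input.
            logger.exception("preprocessing failed; falling back to raw input")
    # Type conversion: make the model output JSON-serializable.
    predictions = to_serializable(model.predict(model_input))
    response = {
        "predictions": predictions,
        "model_version": model_version,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    # Comprehensive logging: inputs, outputs, and metadata for every prediction.
    logger.info("prediction inputs=%s response=%s", features, response)
    return response
```

Including the model version in both the response and the log line is what lets a single prediction be traced back to the model that produced it. Whether to fall back to raw input or return an error is a design choice: if the model cannot accept raw features, the fallback only moves the failure into `model.predict`.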

The Real Value Proposition

What does this technical implementation mean for business stakeholders? Consider a domain expert using a deployed ML system in practice:

When they submit data for classification or prediction, they're interacting with a system where every component—from data preprocessing to model selection to prediction logging—has been systematically verified. The model they're using has been automatically vetted against minimum performance thresholds and compared to previous versions to ensure improvement.

Perhaps most importantly, if a prediction later needs review, the system provides complete traceability from that specific prediction back through the model version, its training data, validation metrics, and development code. This comprehensive lineage builds the foundation of trust that's essential for adoption.

Scaling Beyond Local Implementation

While we've focused on a relatively simple local deployment scenario, these principles transfer readily to more complex enterprise environments. The same patterns can be extended to target Kubernetes clusters or cloud-managed ML services. The model promotion system can incorporate additional domain-specific metrics like fairness assessments or robustness measures. The prediction API can be enhanced with explainability features.

The core insight remains consistent: treating deployment with the same systematic rigor applied to model development creates a foundation for reliable, traceable machine learning in production.

Next Steps

The journey from promising algorithm to trusted production tool is challenging, but with systematic deployment pipelines and evidence-based promotion systems, it's a journey that more ML projects can successfully complete.

Try It Yourself!

We built this ML deployment project to showcase practical, accessible MLOps, and it's available in the zenml-projects repo on our GitHub. The instructions to try it out are in the README. We plan to add more deployment patterns going forward, so keep an eye out.

With ZenML, all of the pipelines are tracked in the dashboard. The pipeline overview shown in the screenshot above demonstrates how feature engineering, model training, and inference pipelines connect through the ZenML Server, with clear visibility of model versions and their promotion status. You can see how the entire ML lifecycle is automatically documented, giving you full traceability from data preparation through training to deployment.