MLOps on GCP: Different tools for different personas

As an ML engineer venturing into the world of MLOps, you've likely noticed the rapid evolution of Google Cloud's machine learning operations offerings. The array of services targeting various aspects of the ML lifecycle can be daunting, especially if you're working within the constraints of existing organizational infrastructure.

This article aims to demystify two of the most popular orchestration mechanisms in Google Cloud Platform (GCP) for MLOps: Cloud Composer and Vertex AI Pipelines. Orchestration serves as the backbone of MLOps activities, coordinating the complex interplay of data processing, model training, evaluation, and deployment.

We'll focus on:

- Cloud Composer: Google's managed version of Apache Airflow, a powerful and flexible workflow orchestration tool widely used in data engineering and MLOps.

- Vertex AI Pipelines: A component of Google's unified ML platform, Vertex AI, designed specifically for building and managing machine learning workflows. It is based on the open-source Kubeflow Pipelines project.

Both services offer robust orchestration capabilities, but choosing between them can be challenging. Let's delve into the specifics of each to help you make an informed decision for your MLOps setup.

Vertex AI (Kubeflow)

Vertex AI is tailored specifically for machine learning workflows. The most popular component is Vertex AI Pipelines, which leverages the Kubeflow Pipelines SDK, providing ML engineers with a familiar interface for constructing end-to-end ML pipelines. Vertex AI offers a comprehensive suite of services beyond pipelines, including the ability to schedule custom training jobs with GPUs. One of Vertex’s primary strengths lies in its native integration with other GCP AI services, offering built-in capabilities for model training, evaluation, and deployment. This tight integration can significantly streamline the MLOps process.

Cloud Composer (Airflow)

Cloud Composer, Google's managed version of Apache Airflow, builds upon the most widely adopted open-source tool for data pipeline orchestration. Originally designed with data engineers in mind, Airflow has evolved to accommodate a wide range of use cases, including machine learning workflows. Its popularity translates to a vast ecosystem of plugins, operators, and community support, making it a versatile choice for various pipeline needs.

Side-by-side comparison (Cloud Composer vs Vertex AI)

Here is a side-by-side comparison of Cloud Composer and Vertex AI:

Cost Comparison

Perhaps the most significant difference in the table above is that with Cloud Composer, organizations pay a constant cost for the always-on environment, while with Vertex AI Pipelines they pay only for the workloads they run.

To see how this plays out, consider a concrete example that compares the two services purely on cost. A machine learning team needs to train and deploy computer vision models. It runs GPU-intensive training jobs daily, along with data preprocessing and model evaluation steps. Let's compare the costs over a month.

Monthly Workflow:

- Daily data preprocessing (CPU-only, 1 hour)

- Model training (GPU-intensive, 4 hours)

- Model evaluation (CPU-only, 1 hour)

Here is the monthly cost breakdown:

Disclaimer: This is a rough calculation based on the online pricing for Cloud Composer 2 and Vertex AI as of August 6, 2024. For the most up-to-date information, please refer to the Google Cloud Pricing Calculator.

Assumptions:

- One daily run of the three-step pipeline = 30 runs/month

- The Cloud Composer environment runs 24/7, and an NVIDIA_TESLA_T4 GPU is attached to it only when required.

- Prices are based on the us-central1 region.

At first glance, this example shows how Vertex AI Pipelines can offer significant cost savings for ML-focused workflows, especially those involving GPU-intensive tasks and intermittent usage patterns. However, if your team already runs Airflow on Cloud Composer, the environment's base cost is already being paid and can be left out of the comparison; the marginal cost of this workload on Cloud Composer is then only $42, versus $65.53 on Vertex AI. Which option is better therefore depends on your circumstances.
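The fixed-versus-pay-per-use trade-off can be sketched as a small cost model. This is a minimal sketch, not a pricing tool. The workflow hours come from the example above (1 h CPU preprocessing, 4 h GPU training, 1 h CPU evaluation, 30 runs per month). Every rate and fee is a caller-supplied parameter, and the numbers in the usage block are placeholders, not GCP prices. The `fixed_fee` parameter models an always-on environment such as Cloud Composer. Setting it to zero gives the marginal cost for a team that already pays for one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Per-run compute hours for the example workflow."""
    cpu_hours: float = 2.0     # 1 h preprocessing + 1 h evaluation
    gpu_hours: float = 4.0     # 4 h GPU training
    runs_per_month: int = 30   # one run per day


def monthly_cost(
    w: Workload,
    cpu_rate: float,
    gpu_rate: float,
    fixed_fee: float = 0.0,
    per_run_fee: float = 0.0,
) -> float:
    """Fixed monthly fee plus pay-per-use compute.

    Rates are USD per hour and fees are USD. All values are supplied by the
    caller; nothing here reflects actual Google Cloud prices.
    """
    per_run = w.cpu_hours * cpu_rate + w.gpu_hours * gpu_rate + per_run_fee
    return fixed_fee + per_run * w.runs_per_month


if __name__ == "__main__":
    w = Workload()
    # Placeholder rates, NOT real GCP prices.
    cpu, gpu, env_fee = 0.25, 0.5, 300.0
    print("Composer, new environment:", monthly_cost(w, cpu, gpu, fixed_fee=env_fee))
    print("Composer, existing environment:", monthly_cost(w, cpu, gpu))
    print("Pay-per-use pipelines:", monthly_cost(w, cpu, gpu))
```

With identical placeholder rates, the totals differ only by the fixed fee. That is the crux of the comparison above: whether the fee counts depends on whether the environment already exists. In a real comparison, the per-hour rates also differ by service, so substitute current figures from the pricing calculator.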

Using ZenML to bridge the gap between Airflow and Kubeflow

ZenML is an open-source MLOps framework designed to simplify the development, deployment, and management of machine learning workflows. It provides data scientists and ML engineers with a standardized approach to building production-ready machine learning pipelines.

One of ZenML's most powerful aspects is its ability to bridge different MLOps tools and platforms, particularly in the realm of workflow orchestration. A prime example of this is how ZenML facilitates the use of both Apache Airflow and Google Cloud's Vertex AI for machine learning purposes.

💡 Read how ZenML compares directly with Airflow and Kubeflow

Here's how ZenML bridges Airflow and Vertex AI, letting data scientists and ML engineers leverage the strengths of both platforms:

- Unified Interface: ZenML provides a consistent interface for defining ML workflows using Python decorators like `@step` and `@pipeline`. This allows users to create pipelines that can be executed on either Airflow or Vertex AI without changing the core pipeline code.

- Orchestration Flexibility: ZenML lets users switch between Airflow and Vertex AI by adjusting their stack configuration. Teams can deploy entire pipelines on either service or adopt a hybrid approach: for instance, they can run a pipeline on Cloud Composer and offload GPU-intensive, ML-specific tasks to Vertex AI. This article explores in depth how ZenML enables this "best of both worlds" strategy, combining the strengths of both platforms to optimize ML workflows.

- Infrastructure as Code: ZenML offers Terraform modules and a stack deployment wizard to quickly set up the necessary infrastructure on GCP, including Vertex AI components and Cloud Composer. This simplifies the process of getting started with both services.

- Artifact Management: ZenML's artifact store abstraction works seamlessly with Google Cloud Storage (GCS), allowing users to store and version ML artifacts regardless of whether they're using Airflow or Vertex AI for orchestration.

- Container Management: The framework integrates with Artifact Registry, ensuring that Docker images for pipeline steps are properly built and stored, which is crucial for both Airflow and Vertex AI execution.

- Authentication and Permissions: ZenML provides service connectors that simplify authentication and permission management for GCP resources, making it easier to set up and use both Airflow and Vertex AI securely.

- Local to Cloud Transition: Users can develop and test their pipelines locally with ZenML, then move them to Cloud Composer or Vertex AI when ready for production by switching the active stack rather than rewriting the pipeline code.

- Best Practices Implementation: ZenML enforces MLOps best practices like reproducibility and modularity, which benefits pipelines running on both Airflow and Vertex AI.

- Specialized ML Features: ZenML enhances Airflow's general-purpose orchestration by layering ML-specific features on top, bridging the gap between Airflow and Vertex AI's specialized machine learning capabilities. ZenML also simplifies the adoption of Vertex AI's ML-specific features for data scientists.

- Experimentation Support: ZenML allows users to experiment with both Airflow and Vertex AI, enabling them to compare performance, features, and costs before committing to one platform for production use.

By providing this bridge, ZenML enables teams to leverage the mature ecosystem and flexibility of Airflow alongside the ML-optimized features of Vertex AI, all while maintaining a consistent development experience and adhering to MLOps best practices.

Example ML Workflow: Optimized Cloud Spend with Cloud Composer and Vertex AI

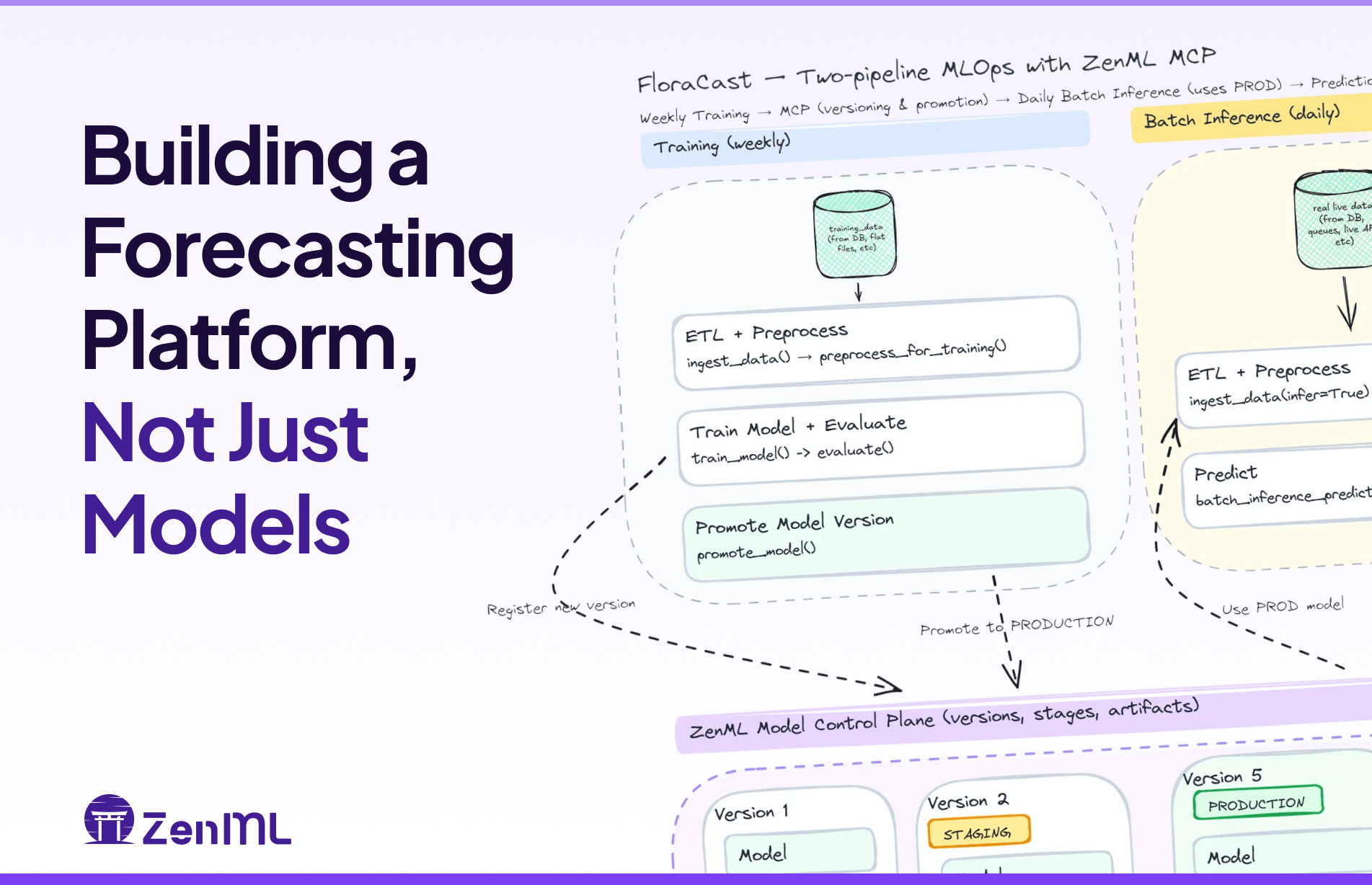

To illustrate how ZenML can leverage both Cloud Composer and Vertex AI, let's consider a practical example: the ECB interest rate prediction pipeline. This real-world scenario shows how ZenML integrates Airflow and Vertex AI within a single framework, using each platform for what it does best.

The workflow consists of three main pipelines:

- ETL Pipeline (Runs on Airflow)

- Feature Engineering Pipeline (Runs on Airflow)

- Model Training Pipeline (Runs on Airflow, but offloads a custom GPU-intensive training job to Vertex AI)

💡 Note that all of the above pipelines can also run entirely on Vertex AI Pipelines.

Best of Both Worlds: ZenML Pipeline on Airflow Orchestrator and Vertex AI Step Operator

As discussed earlier, organizations with existing data engineering teams or in-house Airflow expertise may prefer to leverage Cloud Composer for their daily tasks. In such cases, introducing a separate orchestration mechanism solely for machine learning might not be the most efficient approach.

With ZenML, you can run all steps within a pipeline on Airflow, while selectively offloading GPU-intensive workloads to Vertex AI using ZenML's Vertex AI step operator. This approach allows you to:

- Utilize Airflow for its robust pipeline orchestration capabilities without needing to implement ML-specific features.

- Leverage Vertex AI for GPU-intensive tasks, taking advantage of its ML-optimized infrastructure.

- Manage the entire workflow seamlessly through a single interface provided by ZenML.

This hybrid strategy enables teams to maintain their existing Airflow-based workflows while easily incorporating Vertex AI's powerful ML capabilities when needed. ZenML acts as the unifying layer, providing a cohesive experience that bridges the gap between general-purpose orchestration and specialized ML tasks.

All you have to do is decorate the step you want to offload with a step operator, passing options that specify the infrastructure it needs (for example, the machine type and GPU).
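To make the dispatch idea concrete without depending on ZenML itself, here is a toy model. The `step` decorator and `run_pipeline` function are hypothetical and are not ZenML's API. Each step optionally names a step operator and carries resource options. The runner executes unmarked steps on the orchestrator and routes marked steps to the named backend. The values `"vertex"`, `machine_type` and `accelerator` are illustrative placeholders.

```python
from typing import Callable, Optional


def step(step_operator: Optional[str] = None, **resources):
    """Hypothetical decorator (not ZenML's): tag a function with where it should run."""
    def wrap(fn: Callable) -> Callable:
        fn.step_operator = step_operator  # None means "run on the orchestrator"
        fn.resources = resources          # illustrative resource options
        return fn
    return wrap


@step()
def preprocess(raw: list[float]) -> list[float]:
    # Drop negative readings (stand-in for real preprocessing).
    return [x for x in raw if x >= 0]


@step(step_operator="vertex", machine_type="n1-standard-4", accelerator="NVIDIA_TESLA_T4")
def train(data: list[float]) -> float:
    # Stand-in for a GPU training job: just averages the data.
    return sum(data) / len(data)


def run_pipeline(steps: list[Callable], data, orchestrator: str = "airflow"):
    """Run steps in order and record the backend each one would be dispatched to."""
    plan = []
    for fn in steps:
        backend = fn.step_operator or orchestrator
        plan.append((fn.__name__, backend, fn.resources))
        data = fn(data)  # a real system would submit the step to `backend` here
    return data, plan


if __name__ == "__main__":
    result, plan = run_pipeline([preprocess, train], [1.0, -5.0, 2.0, 3.0])
    for name, backend, resources in plan:
        print(f"{name:<10} -> {backend} {resources}")
```

Calling `run_pipeline(..., orchestrator="local")` changes where the unmarked steps run without touching the step definitions. This mirrors, in miniature, how swapping the orchestrator leaves pipeline code unchanged.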

Let's walk through how to set up and run these pipelines:

Setting Up Your Environment

1. Create and activate a Python virtual environment:

2. Install the required dependencies and ZenML integrations:
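The two setup steps can be sketched in Python with the standard `venv` module. This is a minimal sketch under stated assumptions. It installs plain `zenml` and the `gcp` and `airflow` ZenML integrations via `zenml integration install`. The project's own requirements file is not shown here, so add it as needed. Instead of "activating" the environment, which only affects an interactive shell, the script calls the environment's own executables directly. By default it only returns the commands (`dry_run=True`).

```python
import subprocess
import sys
import venv
from pathlib import Path


def env_bin(env_dir: Path, name: str) -> Path:
    """Path to an executable inside a virtual environment (Windows vs POSIX layout)."""
    if sys.platform == "win32":
        return env_dir / "Scripts" / f"{name}.exe"
    return env_dir / "bin" / name


def setup_commands(env_dir: Path, integrations=("gcp", "airflow")) -> list[list[str]]:
    """Commands that install ZenML and the assumed integrations into the environment."""
    return [
        [str(env_bin(env_dir, "python")), "-m", "pip", "install", "zenml"],
        [str(env_bin(env_dir, "zenml")), "integration", "install", *integrations, "-y"],
    ]


def setup(env_dir: Path = Path(".venv"), dry_run: bool = True) -> list[list[str]]:
    """Create the virtual environment and run the install commands (unless dry_run)."""
    cmds = setup_commands(env_dir)
    if not dry_run:
        venv.create(env_dir, with_pip=True)  # same effect as `python -m venv .venv`
        for cmd in cmds:
            subprocess.run(cmd, check=True)
    return cmds


if __name__ == "__main__":
    for cmd in setup(dry_run=True):
        print(" ".join(cmd))
```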

Configuring Your Stack

To run this pipeline on GCP, you'll need to set up a remote stack with the following components:

- A Cloud Composer orchestrator

- A Vertex AI step operator

- A GCS artifact store

- A GCP container registry

This is very simple using the ZenML GCP Stack Terraform module:

To learn more about the Terraform module, read the ZenML documentation or see the Terraform Registry.

<aside>💡 Looking for a different way to register or provision a stack? Check out the in-browser stack deployment wizard, or the stack registration wizard, for a shortcut on how to deploy & register a cloud stack.

</aside>
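Conceptually, a stack is a named bundle of components. The toy model below represents the four components listed above. `Stack` is a hypothetical dataclass, not ZenML's own class, and component names such as `composer` and `vertex-trainer` are placeholders. It also shows one check worth making before running the hybrid setup: a step that requests a step operator can only run if the active stack provides it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Stack:
    """Hypothetical stand-in for a ZenML stack: a named set of components."""
    name: str
    orchestrator: str
    artifact_store: str
    container_registry: Optional[str] = None
    step_operator: Optional[str] = None


def check_step_operator(stack: Stack, requested: Optional[str]) -> None:
    """Fail fast if a step asks for a step operator the stack does not provide."""
    if requested is not None and stack.step_operator != requested:
        raise ValueError(
            f"stack {stack.name!r} has step operator {stack.step_operator!r}, "
            f"but the step requested {requested!r}"
        )


local = Stack("local", orchestrator="local", artifact_store="local")
gcp_hybrid = Stack(
    "gcp-hybrid",
    orchestrator="composer",           # Airflow on Cloud Composer
    artifact_store="gcs-store",        # backed by a GCS bucket
    container_registry="gcp-registry", # e.g. an Artifact Registry repository
    step_operator="vertex-trainer",    # Vertex AI step operator
)
```

Both stacks can run the same pipeline definition. Only the hybrid one satisfies a training step that asks for `vertex-trainer`.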

Running the Pipelines

Step 1: ETL Pipeline

This pipeline extracts raw ECB interest rate data, transforms it, and loads the cleaned data into a BigQuery dataset.
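The extract-transform-load shape of this pipeline can be sketched without any cloud dependencies. This is a minimal sketch under stated assumptions. The raw data is a CSV with a `date` column and one column per policy rate, and the column names here are hypothetical. Transforming means parsing types, dropping rows with missing values and sorting by date. An in-memory SQLite table stands in for the BigQuery dataset. The sample values in the usage block are made up.

```python
import csv
import io
import sqlite3
from datetime import date

# Hypothetical column names for the three ECB policy rates.
RATE_COLUMNS = ("deposit_facility", "marginal_lending", "main_refinancing")


def extract(raw_csv: str) -> list[dict]:
    """Extract: read raw rows from CSV text."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def transform(rows: list[dict]) -> list[tuple]:
    """Transform: parse types, drop incomplete rows, and sort by date."""
    clean = []
    for row in rows:
        raw_date = (row.get("date") or "").strip()
        values = [(row.get(c) or "").strip() for c in RATE_COLUMNS]
        if not raw_date or "" in values:
            continue  # skip rows with any missing field
        iso = date.fromisoformat(raw_date).isoformat()  # validates the date
        clean.append((iso, *(float(v) for v in values)))
    return sorted(clean)


def load(rows: list[tuple], conn: sqlite3.Connection) -> int:
    """Load: write rows to a table (SQLite stands in for BigQuery); return the row count."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS rates ("
        "date TEXT PRIMARY KEY, deposit_facility REAL, "
        "marginal_lending REAL, main_refinancing REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO rates VALUES (?, ?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM rates").fetchone()[0]


if __name__ == "__main__":
    # Made-up sample rows; the second one is incomplete and gets dropped.
    raw = (
        "date,deposit_facility,marginal_lending,main_refinancing\n"
        "2024-02-01,1.50,3.00,2.25\n"
        "2024-01-01,1.00,2.50,\n"
        "2024-01-15,1.25,2.75,2.00\n"
    )
    print(load(transform(extract(raw)), sqlite3.connect(":memory:")))
```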

Step 2: Feature Engineering Pipeline

This pipeline takes the transformed dataset and augments it with additional features. Again, the result is stored in a BigQuery dataset.
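The article does not list the engineered features. The ones below are hypothetical examples of what "augmenting" policy-rate data could mean:

- the width of the corridor between the marginal lending and deposit facility rates;
- the midpoint of that corridor;
- the change in the deposit facility rate since the previous observation.

The sketch assumes date-sorted rows shaped like `(date, deposit_facility, marginal_lending, main_refinancing)`.

```python
def add_features(rows: list[tuple]) -> list[dict]:
    """Augment date-sorted rate rows with simple derived features (hypothetical choices)."""
    out = []
    prev_dfr = None
    for d, dfr, mlf, mrr in rows:
        out.append({
            "date": d,
            "deposit_facility": dfr,
            "marginal_lending": mlf,
            "main_refinancing": mrr,
            "corridor_width": mlf - dfr,      # ceiling minus floor
            "corridor_mid": (mlf + dfr) / 2,  # centre of the corridor
            # Change vs. the previous row; 0.0 for the first row (no history yet).
            "dfr_change": 0.0 if prev_dfr is None else dfr - prev_dfr,
        })
        prev_dfr = dfr
    return out


if __name__ == "__main__":
    for row in add_features([("2024-01-15", 1.25, 2.75, 2.0), ("2024-02-01", 1.5, 3.0, 2.25)]):
        print(row)
```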

Step 3: Model Training Pipeline

This pipeline uses the augmented dataset to train an XGBoost regression model and potentially promote it with the ZenML Model Control Plane.
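XGBoost and the ZenML Model Control Plane are not reproduced here. As a dependency-free stand-in, the sketch below fits an ordinary least-squares line, a swapped-in technique rather than the project's XGBoost regressor. It predicts the main refinancing rate from the midpoint of the deposit facility and marginal lending rates. It then applies a hypothetical promotion rule: a new model replaces the current production model only if its mean absolute error on held-out data is lower.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class LinearModel:
    intercept: float
    slope: float

    def predict(self, dfr: float, mlf: float) -> float:
        # Single feature: midpoint of the deposit/marginal lending corridor.
        return self.intercept + self.slope * (dfr + mlf) / 2


def fit(rows: list[tuple[float, float, float]]) -> LinearModel:
    """Ordinary least squares on one feature. rows: (dfr, mlf, mrr)."""
    if not rows:
        raise ValueError("need at least one training row")
    xs = [(dfr + mlf) / 2 for dfr, mlf, _ in rows]
    ys = [mrr for _, _, mrr in rows]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        return LinearModel(intercept=my, slope=0.0)  # no variation: predict the mean
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return LinearModel(intercept=my - slope * mx, slope=slope)


def mae(model: LinearModel, rows) -> float:
    """Mean absolute error of the model on (dfr, mlf, mrr) rows."""
    return sum(abs(model.predict(d, m) - y) for d, m, y in rows) / len(rows)


def maybe_promote(candidate: LinearModel, production: Optional[LinearModel], holdout) -> LinearModel:
    """Hypothetical rule: promote the candidate only if it beats production on held-out MAE."""
    if production is None or mae(candidate, holdout) < mae(production, holdout):
        return candidate
    return production
```

Keeping the promotion decision as an explicit comparison against the current production model means a worse retrain never silently replaces a better one.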

By following these steps, you can see how ZenML orchestrates the entire workflow, seamlessly transitioning between Airflow for data processing (ETL and Feature Engineering) and Vertex AI for model training. This demonstrates ZenML's ability to bridge different tools and platforms, allowing you to leverage the strengths of both Airflow and Vertex AI in a single, cohesive ML pipeline.

After running the pipelines, you can explore the results in the ZenML UI, which provides a unified view of your entire workflow, regardless of whether steps were executed on Airflow or Vertex AI.

This example showcases how ZenML simplifies the process of building complex, multi-platform ML workflows, enabling data scientists and ML engineers to focus on their models and data rather than infrastructure management.

Using Gradio to Demo the Model

After training and deploying our model using ZenML, we can create an interactive demo using Gradio. This allows stakeholders to easily interact with the model and understand its predictions without needing to dive into the code.

Here's how we set up the Gradio demo:

First, we import the necessary libraries and initialize the ZenML client:

We fetch the latest production model from ZenML:

We define a prediction function that takes user inputs and returns the model's prediction:
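Without Gradio or the ZenML client in the picture, the prediction callback itself is plain Python. This is a minimal sketch under stated assumptions. The loaded model is assumed to expose a `predict(dfr, mlf)` method, and `MidpointModel` below is a hypothetical stand-in; the real project loads an XGBoost model from ZenML, whose interface differs. The input check is also an assumption. It relies on the ECB rate corridor, where the deposit facility rate is the floor and the marginal lending rate is the ceiling.

```python
from typing import Protocol


class RateModel(Protocol):
    def predict(self, dfr: float, mlf: float) -> float: ...


class MidpointModel:
    """Hypothetical stand-in model: predicts the midpoint of the corridor."""

    def predict(self, dfr: float, mlf: float) -> float:
        return (dfr + mlf) / 2


def make_predict_fn(model: RateModel):
    """Build the callback a UI would call with the two slider values."""
    def predict_rate(deposit_facility_rate: float, marginal_lending_rate: float) -> str:
        if deposit_facility_rate > marginal_lending_rate:
            return "Deposit Facility Rate must not exceed Marginal Lending Facility Rate."
        mrr = model.predict(deposit_facility_rate, marginal_lending_rate)
        return f"Predicted Main Refinancing Rate: {mrr:.2f}%"
    return predict_rate


if __name__ == "__main__":
    predict_rate = make_predict_fn(MidpointModel())
    print(predict_rate(3.75, 4.75))
```

In the Gradio app, a callback like `predict_rate` would be passed as the function of a `gr.Interface` with two `gr.Slider` inputs and a text output.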

Finally, we create and launch the Gradio interface:

This code creates a simple web interface where users can input the Deposit Facility Rate and Marginal Lending Facility Rate using sliders. When they submit their inputs, the model predicts the Main Refinancing Rate and displays the result.

By using Gradio in combination with ZenML, we've created a seamless pipeline from model training to interactive demo. This approach allows for rapid iteration and easy sharing of model results with non-technical stakeholders, showcasing the power of integrating MLOps practices with user-friendly visualization tools.

Conclusion: Bridging Cloud Composer and Vertex AI with ZenML

This ECB interest rate prediction project serves as an excellent case study in comparing and leveraging both Google Cloud Composer (Airflow) and Vertex AI for machine learning workflows. Through ZenML, we've seamlessly integrated these powerful platforms, allowing us to explore their strengths in different stages of our ML pipeline:

- Cloud Composer (Airflow) for ETL and Feature Engineering

- Vertex AI for Model Training and Deployment

Key insights from our exploration:

- Flexibility in Orchestration: ZenML allowed us to easily switch between Airflow and Vertex AI, demonstrating how teams can choose the best tool for each part of their ML workflow.

- Airflow's Strengths: For teams already familiar with Airflow or those with complex data processing needs, Cloud Composer provides a robust, general-purpose orchestration solution that extends beyond ML workflows.

- Vertex AI's ML Focus: Vertex AI shines in ML-specific tasks, offering features like automated model training, easy deployment, and built-in MLOps practices, making it an excellent choice for teams focused primarily on machine learning.

- Seamless Integration: ZenML's abstraction layer allowed us to use both platforms within a single project, showcasing its ability to create cohesive, multi-platform ML pipelines.

- Ease of Comparison: By using ZenML, we could easily compare the performance and features of both platforms without significant code changes, enabling data scientists to make informed decisions about their infrastructure.

In summary, this article demonstrates that the choice between Cloud Composer and Vertex AI isn't necessarily an either-or decision. ZenML empowers teams to use both, leveraging the strengths of each platform where they fit best:

- If your team is already using Airflow and values its flexibility for diverse data workflows, Cloud Composer can be an excellent choice.

- For ML teams looking for specialized features like streamlined model deployments and ML-specific optimizations, Vertex AI offers a more tailored solution.

The beauty of using ZenML is that it accommodates both scenarios, allowing teams to start with one platform and easily transition to or incorporate the other as needs evolve. This flexibility ensures that your ML infrastructure can adapt to changing requirements without necessitating a complete overhaul of your workflows.

Ultimately, whether you choose Cloud Composer, Vertex AI, or a combination of both, ZenML provides the glue that binds these powerful tools into a cohesive, efficient, and scalable ML pipeline. It empowers data scientists and ML engineers to focus on building great models while leveraging the best of what Google Cloud has to offer for machine learning operations.

❓FAQ

- What are the main differences between using Cloud Composer (Airflow) and Vertex AI in this ZenML project?

  - Cloud Composer (Airflow): Used for ETL and feature engineering pipelines, leveraging its strength in data processing and workflow orchestration.

  - Vertex AI: Utilized for model training and deployment, taking advantage of its ML-specific features and scalable infrastructure.

- Can I use ZenML with both Cloud Composer and Vertex AI in the same project?

  - Yes: This project demonstrates how ZenML can seamlessly integrate both platforms, allowing you to use Cloud Composer for data processing and Vertex AI for ML tasks within a single workflow.

- Which platform should I choose for my ML project: Cloud Composer or Vertex AI?

  - If you're already familiar with Airflow or have complex data processing needs, Cloud Composer might be a better fit.

  - For teams focused primarily on ML tasks and looking for specialized features like automated model training and easy deployment, Vertex AI is recommended.

  - ZenML allows you to use both, so you can choose based on your specific requirements for each part of your pipeline.

- How does ZenML simplify the use of Cloud Composer and Vertex AI?

  - ZenML provides a unified interface for defining pipelines that can run on either platform.

  - It handles the complexities of infrastructure setup and configuration, allowing you to focus on your ML workflow.

  - ZenML's abstraction layer enables easy switching between platforms without significant code changes.

- Is it possible to start with one platform and switch to the other later using ZenML?

  - Yes: ZenML's modular design allows you to start with one platform and easily transition to or incorporate the other as your needs evolve, without overhauling your entire workflow.

- How does the Gradio demo fit into the ZenML workflow with Cloud Composer and Vertex AI?

  - The Gradio demo showcases how ZenML can integrate the entire ML lifecycle, from data processing (Cloud Composer) and model training (Vertex AI) to creating an interactive demo for stakeholders.

- What are the key benefits of using ZenML in this ECB interest rate prediction project?

  - Unified workflow across different GCP services

  - Flexibility to use both Cloud Composer and Vertex AI

  - Easy transition between development and production environments

  - Streamlined MLOps practices like versioning and reproducibility

- Can this project setup be adapted for other financial modeling tasks?

  - Yes: The pipeline structure and integration of Cloud Composer and Vertex AI through ZenML can be adapted for various financial modeling and prediction tasks, showcasing the versatility of this setup.