Building on the success of last week's blog post, Huggingface Model to Sagemaker Endpoint: Automating MLOps with ZenML we're excited to share a new development. Following the positive reception and community interest, we've created ZenML's End-to-End NLP Training, Promotion, and Deployment Project Template. This template is a practical tool for teams looking to integrate NLP solutions into their workflows.

In this post, we'll explore how this template simplifies the lifecycle of an NLP model, focusing on reproducibility, scalability, and adherence to best practices. Whether you're fine-tuning models for specific use cases or deploying them into production, ZenML's NLP project template provides a straightforward approach that aligns with MLOps workflows.

The Enduring Relevance of Traditional NLP

While LLMs have captured the spotlight, traditional NLP techniques continue to play a pivotal role in the field. Fine-tuning a model on domain-specific data or incorporating business logic into the NLP pipeline are areas where core NLP shines.

Moreover, the computational cost associated with training and deploying LLMs can be prohibitive for many organizations. Smaller, more efficient models that can be quickly adapted to specific tasks remain a practical choice for businesses looking to integrate NLP into their operations without breaking the bank.

ZenML's NLP Project Template: A Path to Production

ZenML's NLP project template is a testament to the fact that productionalizing NLP models doesn't have to be a Herculean task. The template is designed to streamline the entire lifecycle of an NLP model, from training to deployment, with a focus on reproducibility and scalability.

Quickstart to Production

Getting started with the ZenML NLP template is a breeze. With a simple command, you can initialize a new project that comes pre-configured with sensible defaults, allowing you to focus on the unique aspects of your NLP use case:

This command sets up a project structure that includes pipelines for training, promoting, and deploying your NLP model. Each pipeline is parameterized, offering flexibility and reusability, and can be customized to suite the specific requirements of a scenario involving the fine-tuning of an NLP model.

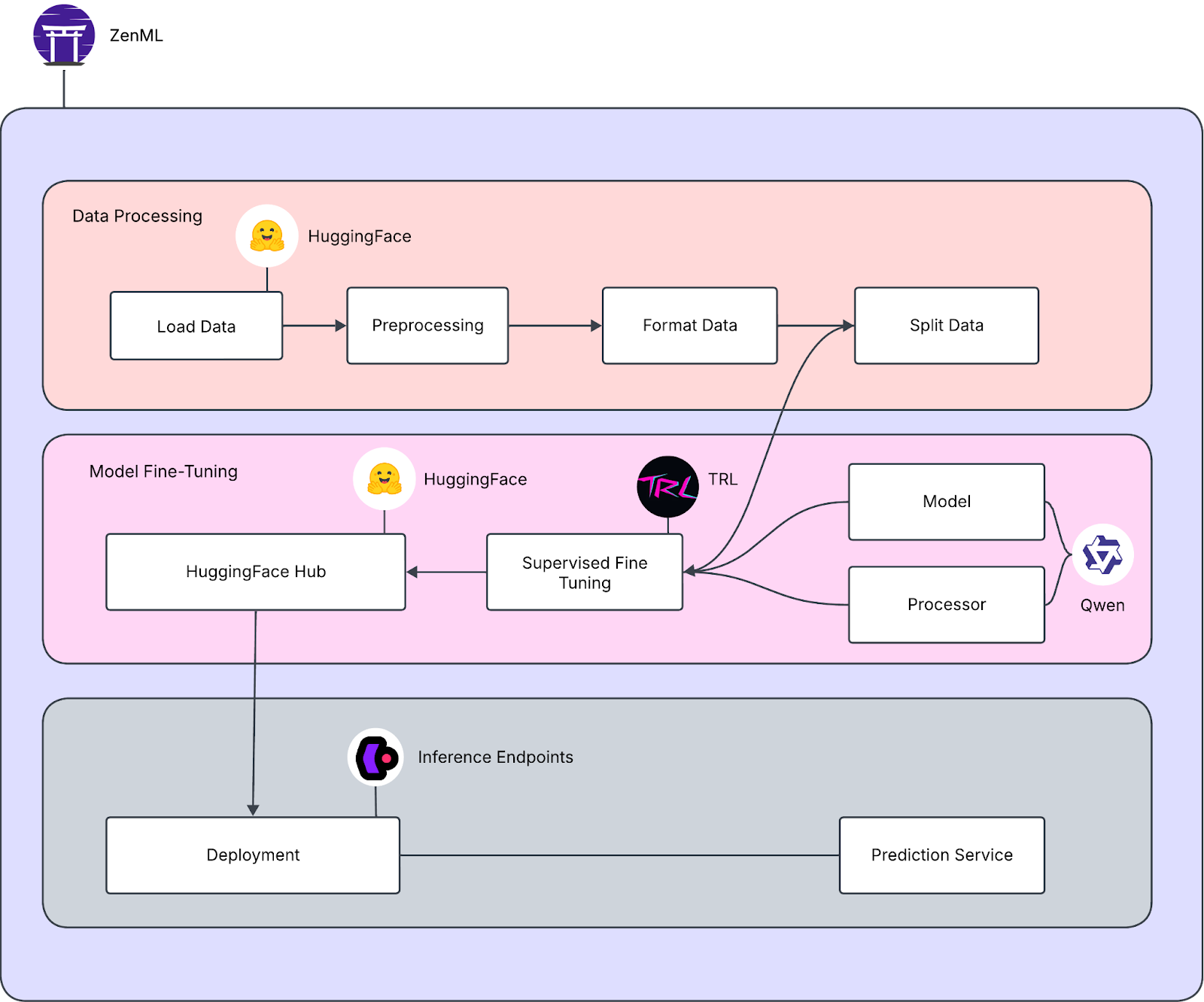

The template consists of three pipelines with the following high-level setup:

Training with Precision

The training pipeline is the heart of the NLP project. It handles data loading, tokenization, model training, and model registration. The steps are designed to ensure that only models that meet quality standards are promoted for inference. With integration to HuggingFace Datasets and Models, you can easily access pre-trained models and datasets, speeding up the development and experimentation process.

Smart Promotion Strategies

The promotion pipeline is responsible for advancing a model version to a specified stage based on metrics or simply promoting the latest version. This ensures that the best-performing models are the ones being deployed, aligning with the goal of delivering high-quality NLP solutions.

Seamless Deployment

Deployment is the final, crucial step that brings machine learning models into the hands of users. It's where the rubber meets the road, and your models start delivering value. ZenML's NLP project template offers a variety of deployment options, each tailored to different stages of development and production needs. Let's delve into the nuances of deploying locally, to HuggingFace Hub, and to SkyPilot, and how ZenML facilitates these processes.

Local Deployment: Testing in a Controlled Environment

Local deployment is often the first step in bringing your model to life. It allows you to test the model in a controlled environment, ensuring that it behaves as expected before scaling up to a more public or resource-intensive setting. With ZenML, local deployment is straightforward. The template includes a Gradio-based interface, which provides a user-friendly way to interact with your model right from your local machine. This is invaluable for quick iterations and demonstrations, as it gives immediate feedback on how the model performs with real input data.

Deploying locally also means you have full control over the environment, making it easier to debug and optimize your model. ZenML's template ensures that all necessary dependencies are in place, and with a simple command, your NLP model is up and running, ready for you to evaluate its predictions.

HuggingFace Hub Deployment: Sharing and Collaboration

HuggingFace Hub has emerged as a popular platform for sharing and collaborating on machine learning models, especially in the NLP domain. Deploying to HuggingFace Hub means your model becomes accessible to a wide community of researchers and practitioners, who can use and build upon your work.

ZenML simplifies the deployment to HuggingFace Hub by automating the process of pushing your model and associated Gradio interface to the platform. This means that with minimal configuration, your model is not only shared but also comes with an interactive space where users can test it out. The integration with HuggingFace Hub is a testament to ZenML's commitment to MLOps best practices, where sharing and collaboration are key to advancing the field.

SkyPilot Deployment: Scaling to the Cloud

When you're ready to scale your NLP model to handle real-world traffic, cloud deployment becomes essential. SkyPilot is a framework that simplifies managing and deploying pipelines and models to the cloud VMs, handling the complexities of infrastructure management so you can focus on your model's performance.

With ZenML's project template, deploying to SkyPilot is as seamless as the other options. The template includes the necessary configurations to launch your model on a virtual machine, taking advantage of cloud resources to serve predictions at scale. This is particularly useful for production environments where reliability, availability, and scalability are critical.

A Glimpse into a Generated Project

The beauty of the ZenML template lies in its configurability. The template parameters allow you to tailor the project to your needs. You can specify the project name, version, license, and even the technical name for your NLP product. This level of customization ensures that each project you generate with the template is unique and suited to the task at hand.

For instance, you can choose the dataset and model from HuggingFace's vast repository, select your target environment for deployments, and decide whether to deploy locally or to cloud providers like AWS or GCP. The template also allows you to enable or disable metric-based promotion and notifications on pipeline outcomes, giving you control over the model lifecycle management.

Imagine we've just initialized a new NLP project using the ZenML template. We've gone through the setup and customized our parameters, and now we have a fully functional project ready for training.

The project is ready to be used and can run as-is without any further code changes! You can try it right away by installing ZenML, the needed ZenML integration, and then calling the CLI included in the project. We also recommend that you start the ZenML UI locally to get a better sense of what is going on under the hood:

Our created stack will look like the following

When the pipelines are done running, you can check out the results in the ZenMLUI by following the link printed in the terminal (or you can go straight to the ZenML UI pipelines run page.

Next, you should:

- look at the CLI help to see what you can do with the project:

Embracing Best Practices with ZenML

ZenML's NLP project template stands as a testament to its readiness to support and enhance NLP use cases. This template not only aligns with best practices for ML pipelines but also serves as a testament to ZenML's adaptability to the nuances of natural language processing. Through a commitment to modularization, versioning, and linting, ZenML ensures that NLP solutions are not only easier to maintain but also readily adaptable for iterative improvements. The incorporation of notification hooks further underscores the platform's dedication to keeping users informed about the status of their ML workflows. This practical integration of best practices positions ZenML as a robust solution for NLP projects, providing a solid foundation for development and maintenance.

Interested in standardizing your MLOps workflows? ZenML Cloud is now available to all - get a managed ZenML server with important features such as RBAC and pipeline triggers. Book a demo with us now to learn how you can create your own MLOps pipelines today.