The LLM Fine-Tuning Challenge

Fine-tuning large language models (LLMs) for specific business domains has become essential for companies looking to leverage AI effectively. However, the process is fraught with challenges:

- Data preparation complexity: Converting raw datasets into formats required by fine-tuning platforms

- Reproducibility issues: Difficulty tracking experiments across multiple iterations

- Cost management: Without proper tracking, resources are wasted on duplicate runs

- Production deployment: Moving from successful experiments to reliable production systems

- Monitoring drift: Detecting when fine-tuned models need retraining

While foundation models like GPT-4 and Llama 3 offer impressive capabilities, they often lack domain-specific knowledge or tone alignment with company communications. Fine-tuning solves this problem, but implementing a reliable, production-grade fine-tuning workflow remains challenging for most teams.

The stakes are high: Gartner reports that 78% of organizations attempting to deploy LLMs struggle with inconsistent results and limited reproducibility, while McKinsey estimates potential value of $200-500 billion annually for companies that successfully implement domain-specific LLMs.

The ZenML + OpenPipe Solution

The ZenML-OpenPipe integration addresses these challenges by combining:

- ZenML's production-grade MLOps orchestration: Pipeline tracking, artifact lineage, and deployment automation

- OpenPipe's specialized LLM fine-tuning capabilities: Optimized training processes for various foundation models

This integration enables data scientists and ML engineers to:

- Build reproducible fine-tuning pipelines that can be shared across teams

- Track all datasets, experiments, and models with complete lineage

- Deploy fine-tuned models to production with confidence

- Schedule recurring fine-tuning jobs as data evolves

A key advantage of this integration is that OpenPipe automatically deploys your fine-tuned models as soon as training completes, making them immediately available via API. When you run the pipeline again with new data, your model is automatically retrained and redeployed, ensuring your production model always reflects your latest data.

Building a Fine-Tuning Pipeline

Let's examine the core components of an LLM fine-tuning pipeline built with ZenML and OpenPipe.

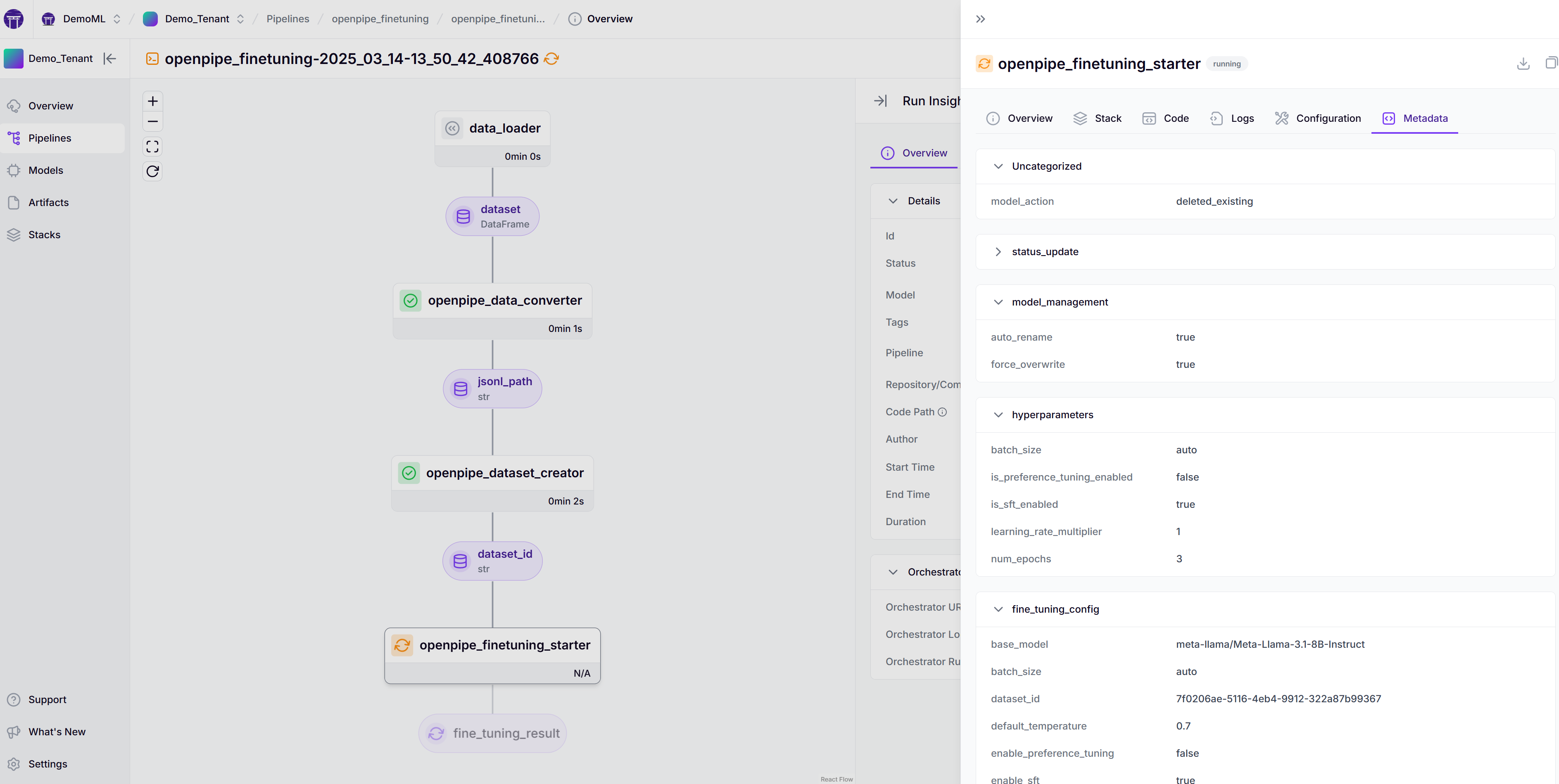

The Pipeline Architecture

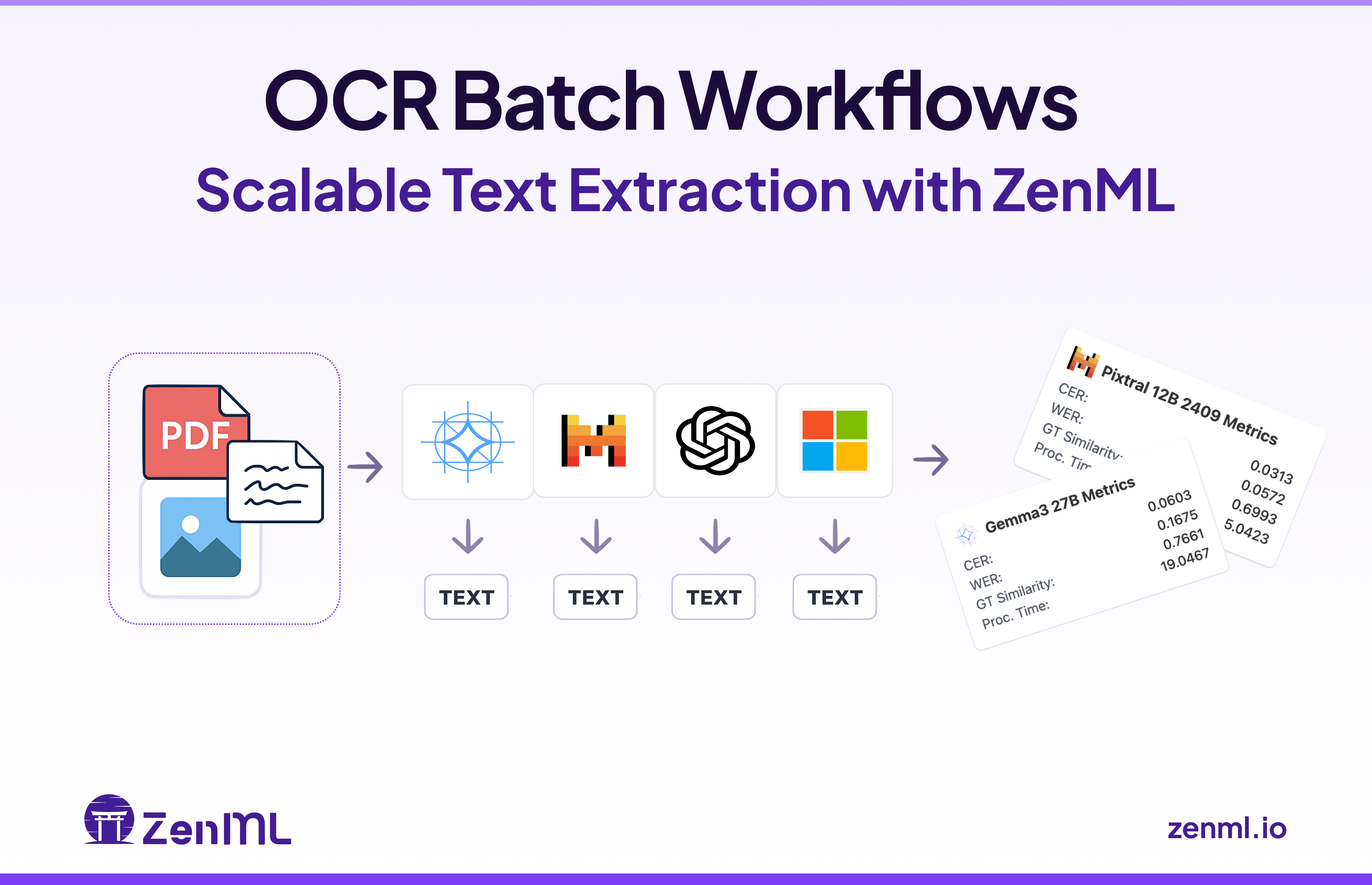

The integration provides a modular pipeline architecture that handles the end-to-end fine-tuning process, from converting raw data into OpenPipe's format to launching and tracking the fine-tuning job.
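A minimal, framework-free sketch of that step chain is below. It uses plain functions so it runs without ZenML installed. In ZenML, each function would carry the `@step` decorator and the composing function would carry `@pipeline`. The step names, parameters, and the placeholder model id are hypothetical stand-ins, not the integration's actual steps such as `openpipe_data_converter`. The fine-tuning step is a stub that reports what it would submit instead of calling OpenPipe.

```python
from typing import Any

# Hypothetical step functions. In ZenML each would carry the @step decorator.
def load_data(rows: list[dict[str, str]]) -> list[dict[str, str]]:
    """Load raw examples (passed in directly to keep the sketch self-contained)."""
    return [dict(r) for r in rows]

def convert_data(rows: list[dict[str, str]], system_prompt: str) -> list[dict[str, Any]]:
    """Turn each row into a chat-style training record with explicit roles."""
    return [
        {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": r["question"]},
                {"role": "assistant", "content": r["answer"]},
            ]
        }
        for r in rows
    ]

def start_finetuning(records: list[dict[str, Any]], base_model: str) -> dict[str, Any]:
    """Stub: report what would be submitted instead of calling OpenPipe."""
    return {"base_model": base_model, "num_records": len(records), "status": "submitted"}

def finetuning_pipeline(
    rows: list[dict[str, str]], system_prompt: str, base_model: str
) -> dict[str, Any]:
    """Compose the steps. Each intermediate result is an artifact ZenML would track."""
    raw = load_data(rows)
    records = convert_data(raw, system_prompt)
    return start_finetuning(records, base_model)

result = finetuning_pipeline(
    [{"question": "How do I reset my password?", "answer": "Use Settings > Security."}],
    system_prompt="You are a helpful support agent.",
    base_model="example-base-model",  # placeholder, not a real model id
)
print(result)
```

Keeping each stage a separate function with explicit inputs and outputs is what lets an orchestrator cache, version, and swap individual steps.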

This pipeline architecture provides several key advantages:

- Modular design: Each step handles a specific part of the workflow

- Parameter customization: Easily adjust training parameters based on your needs

- Comprehensive tracking: All artifacts and parameters are tracked automatically

- Reproducibility: Run the same pipeline with different parameters for comparison

For more details on building custom pipelines, check out the ZenML pipeline documentation.

Data Preparation Made Simple

One of the most challenging aspects of LLM fine-tuning is preparing the training data in the correct format. The openpipe_data_converter step handles this automatically.

This step transforms your raw data (CSV or DataFrame) into the specialized JSONL format required by OpenPipe, handling:

- Formatting training examples with proper role assignments

- Splitting data into training and testing sets

- Applying system prompts consistently

- Preserving important metadata for analysis
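The sketch below shows what such a conversion can look like. It assumes OpenPipe's chat format: one JSON object per line with a `messages` array, plus optional `split` (`"TRAIN"`/`"TEST"`) and `metadata` fields. Verify these field names against OpenPipe's dataset documentation. The function name, the hash-based split, and the `test_fraction` parameter are illustrative choices, not the integration's actual implementation.

```python
import hashlib
import json

def to_openpipe_jsonl(rows, system_prompt, test_fraction=0.1):
    """Convert question/answer rows into chat-format JSONL (assumed OpenPipe schema)."""
    lines = []
    for row in rows:
        # Deterministic split: hashing the question keeps each example in the
        # same split across reruns, so test metrics stay comparable.
        digest = hashlib.sha256(row["question"].encode("utf-8")).hexdigest()
        bucket = int(digest[:8], 16) / 0xFFFFFFFF  # roughly uniform in [0, 1]
        split = "TEST" if bucket < test_fraction else "TRAIN"
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},  # same prompt on every example
                {"role": "user", "content": row["question"]},
                {"role": "assistant", "content": row["answer"]},
            ],
            "split": split,
            # Any extra columns (e.g. product) are carried along as metadata.
            "metadata": {k: v for k, v in row.items() if k not in ("question", "answer")},
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)

rows = [{"question": "How do I export reports?",
         "answer": "Open Reports and click Export.",
         "product": "Analytics"}]
print(to_openpipe_jsonl(rows, "You are RapidTech's support assistant."))
```

A hash-based split is used here instead of a random shuffle, so adding new rows later does not reshuffle which existing examples are held out.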

For more information on OpenPipe's data format requirements, visit their dataset documentation.

Practical Implementation

Let's walk through a real-world implementation of fine-tuning a customer support assistant model.

Case Study: RapidTech's Customer Support Automation

RapidTech, a fictional SaaS company, needed to fine-tune an LLM to handle customer support queries about their products. Their requirements included:

- Training on 5,000+ historical customer support conversations

- Regular retraining as new product features were released

- Consistent performance with company voice and product knowledge

- Cost-effective inference for high-volume support queries

Implementation Approach

We prepare the CSV training data with three key columns:

- question: Customer queries

- answer: Agent responses

- product: The product category (for metadata)

Next, we set up the ZenML pipeline to handle the end-to-end process, from data conversion to fine-tuning.
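The sketch below illustrates one way to make such runs reproducible and to avoid paying for duplicate runs. It collects every run parameter into one immutable config and derives a fingerprint from that config together with the exact training data. Identical fingerprints mean identical inputs. The field names and values are hypothetical placeholders. Hashing the raw CSV bytes to pin the data version is an assumption, not something the integration is documented to do.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class FineTuneConfig:
    # Hypothetical parameter names; map them to the real step parameters.
    base_model: str
    system_prompt: str
    test_fraction: float = 0.1
    num_epochs: int = 3

def run_fingerprint(config: FineTuneConfig, dataset_bytes: bytes) -> str:
    """Hash the config (with stable key order) together with the training data."""
    h = hashlib.sha256()
    h.update(json.dumps(asdict(config), sort_keys=True).encode("utf-8"))
    h.update(hashlib.sha256(dataset_bytes).digest())
    return h.hexdigest()

def should_launch(config: FineTuneConfig, dataset_bytes: bytes, seen: set[str]) -> bool:
    """Return False if an identical run (same config and data) was already launched."""
    fp = run_fingerprint(config, dataset_bytes)
    if fp in seen:
        return False
    seen.add(fp)
    return True

cfg = FineTuneConfig(base_model="example-base-model",
                     system_prompt="You are RapidTech's support assistant.")
data = b"question,answer,product\nHow do I export?,Use the Export menu.,Analytics\n"
seen: set[str] = set()
print(should_launch(cfg, data, seen), should_launch(cfg, data, seen))
```

In practice, `seen` would be backed by persistent storage such as run metadata, not an in-memory set.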

The implementation follows OpenPipe's fine-tuning best practices while leveraging ZenML's orchestration capabilities.

Using Your Deployed Model

Once the fine-tuning process completes, OpenPipe automatically deploys your model and makes it available through its API. You can start using your fine-tuned model immediately with a simple HTTP request, for example with curl.
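For consistency with the other Python examples, the sketch below builds the equivalent request with the standard library instead of curl. It assumes three things, all of which you should confirm in OpenPipe's API reference: an OpenAI-compatible chat completions endpoint at `https://api.openpipe.ai/api/v1/chat/completions`, bearer-token authentication, and model ids of the form `openpipe:<your-model-slug>`. The `OPENPIPE_API_KEY` environment variable name and the model slug are placeholders.

```python
import json
import os
import urllib.request

# Assumed endpoint; verify against OpenPipe's API reference.
OPENPIPE_URL = "https://api.openpipe.ai/api/v1/chat/completions"

def build_chat_request(model, user_message, api_key, system_prompt=None):
    """Build (but do not send) an OpenAI-style chat completions request."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        OPENPIPE_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

def ask(model, user_message, system_prompt=None):
    """Send the request and return the reply text (requires network and an API key)."""
    req = build_chat_request(model, user_message, os.environ["OPENPIPE_API_KEY"], system_prompt)
    with urllib.request.urlopen(req, timeout=30) as resp:
        data = json.load(resp)
    # OpenAI-compatible responses put the reply in choices[0].message.content.
    return data["choices"][0]["message"]["content"]
```

Usage, with a hypothetical model slug: `ask("openpipe:rapidtech-support", "How do I add a teammate?")`. Separating request construction from sending makes the payload easy to inspect and test offline.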

For Python applications, you can use the OpenPipe Python SDK, which follows the OpenAI SDK pattern for seamless integration.

This SDK approach is particularly useful for integrating with existing applications or services, and it supports tagging your requests for analytics and monitoring.

This immediate deployment capability eliminates the need for manual model deployment, allowing you to test and integrate your custom model right away.

Automated Redeployment with New Data

When product information changes or you collect new training data, simply run the pipeline again with the updated dataset.
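How you combine new examples with the existing dataset is up to you. The sketch below shows one option. It merges old and new CSV rows and lets a newer answer replace an older one for the same question, which is useful when a product change makes an old answer wrong. It then writes out the combined CSV. The column names follow the RapidTech example. The whitespace/case normalization rule and the function names are illustrative assumptions.

```python
import csv
import io

FIELDS = ["question", "answer", "product"]  # columns from the RapidTech example

def _key(question: str) -> str:
    # Normalize case and whitespace so trivial variants count as the same question.
    return " ".join(question.lower().split())

def merge_training_data(existing_csv: str, new_csv: str) -> str:
    """Merge two CSV datasets; rows from new_csv override matching existing rows."""
    merged: dict[str, dict[str, str]] = {}
    for source in (existing_csv, new_csv):  # later sources win on conflicts
        for row in csv.DictReader(io.StringIO(source)):
            merged[_key(row["question"])] = {f: row[f] for f in FIELDS}
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(merged.values())
    return out.getvalue()
```

An overridden row keeps its original position in the output, because Python dicts preserve insertion order when an existing key is updated.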

OpenPipe will automatically retrain and redeploy your model with the updated data, ensuring your production model always reflects the latest information and examples. This seamless redeployment process makes it easy to keep your models up to date without manual intervention.

Performance Metrics and Cost Analysis

The fine-tuned model demonstrates:

- Better domain knowledge: Correctly answering questions about RapidTech products

- More relevant responses: Directly addressing customer concerns

- Faster responses: No need for external knowledge lookups

- Lower cost: Smaller, fine-tuned model vs. larger foundation model

These results align with the broader industry findings documented in ZenML's LLMOps Database.

Data Management for Fine-Tuning

Effective data management is critical for successful LLM fine-tuning. The ZenML-OpenPipe integration makes this process painless through automated tracking.

Handling Different Data Sources

The pipeline supports multiple data sources, such as CSV files and pandas DataFrames.
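The sketch below normalizes these inputs into one list of row dictionaries and checks for the required columns. A string or `Path` is treated as a CSV file path. An object with a `to_dict` method is treated as a pandas DataFrame and converted with `DataFrame.to_dict(orient="records")`. Anything else is assumed to be an iterable of mappings. The function name and this dispatch rule are illustrative, not the integration's code.

```python
import csv
from pathlib import Path
from typing import Any

REQUIRED = {"question", "answer"}  # minimum columns assumed by the converter sketch

def load_rows(source: Any) -> list[dict[str, Any]]:
    """Normalize a CSV path, DataFrame, or iterable of dicts into a list of row dicts."""
    if isinstance(source, (str, Path)):
        with open(source, newline="", encoding="utf-8") as f:
            rows = list(csv.DictReader(f))
    elif hasattr(source, "to_dict"):
        # pandas DataFrame: one dict per row
        rows = source.to_dict(orient="records")
    else:
        rows = [dict(r) for r in source]  # already an iterable of mappings
    if not rows:
        raise ValueError("no training rows found")
    missing = REQUIRED - set(rows[0])
    if missing:
        raise ValueError(f"missing required columns: {sorted(missing)}")
    return rows
```

Failing early on missing columns is cheaper than discovering the problem after a fine-tuning job has been queued.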

Behind the scenes, the pipeline converts your data to the required JSONL format, with one training example per line.

For more details on OpenPipe's JSONL format requirements, refer to their data preparation documentation.

Data Quality Monitoring

The pipeline logs detailed metadata about your training data.
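Which fields are logged depends on the step implementation. As an example, summary statistics like the ones below are cheap to compute and useful to compare across runs. In ZenML you would attach such a dictionary to the step or artifact as metadata. The exact call for that has changed between ZenML versions, so check the metadata documentation. The field names here are illustrative.

```python
from collections import Counter
from statistics import mean

def dataset_summary(rows):
    """Compute simple, comparable statistics for a list of question/answer rows."""
    if not rows:
        return {"num_examples": 0}
    products = Counter(r.get("product", "unknown") for r in rows)
    return {
        "num_examples": len(rows),
        # Case-insensitive count, so near-duplicate questions are easy to spot.
        "num_unique_questions": len({r["question"].strip().lower() for r in rows}),
        "avg_question_chars": round(mean(len(r["question"]) for r in rows), 1),
        "avg_answer_chars": round(mean(len(r["answer"]) for r in rows), 1),
        "product_distribution": dict(products),
    }
```

A gap between `num_examples` and `num_unique_questions` is a quick signal of duplicated training data.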

This information is accessible in the ZenML dashboard, allowing you to:

- Track data distribution across different pipeline runs

- Compare model performance against data quality metrics

- Detect potential data drift requiring model retraining
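To turn the last point into a concrete check, one option is to compare the product-category distribution of the current training data with the previous run's. The sketch below does this with total variation distance (half the sum of absolute differences in proportions) and flags retraining when the distance exceeds a threshold. The 0.2 threshold is an arbitrary placeholder to tune on your own data. Category shift is only one possible drift signal.

```python
from collections import Counter

def category_proportions(labels):
    """Map each category label to its share of the dataset."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {k: v / total for k, v in counts.items()} if total else {}

def total_variation_distance(p, q):
    """0.0 for identical distributions, 1.0 for completely disjoint ones."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)

def needs_retraining(previous_labels, current_labels, threshold=0.2):
    """Flag retraining when the category mix has shifted more than `threshold`."""
    tvd = total_variation_distance(
        category_proportions(previous_labels), category_proportions(current_labels)
    )
    return tvd > threshold

previous = ["Core"] * 8 + ["Analytics"] * 2
current = ["Core"] * 4 + ["Analytics"] * 2 + ["Mobile"] * 4  # a new product appears
print(needs_retraining(previous, current))
```

Here a new "Mobile" category makes up 40% of the current data, which pushes the distance well past the placeholder threshold.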

Learn more about ZenML's metadata tracking in their documentation.

Monitoring and Reproducibility

One of the most powerful aspects of this integration is its comprehensive monitoring.

Tracking Fine-Tuning Progress

The openpipe_finetuning_starter step logs detailed information throughout the training process.

This provides a real-time view of:

- Training status and progress

- Model hyperparameters

- Error messages or warnings

- Time spent in each training phase
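The sketch below shows a polling loop that records each status change with its elapsed time. That is enough to reconstruct how long each training phase took. `get_status` is a hypothetical callable you would implement against OpenPipe's API. The status strings and the set of terminal states are also assumptions. The clock and sleep functions are injectable so the loop can be tested without waiting.

```python
import time
from typing import Callable

TERMINAL = {"DEPLOYED", "ERROR"}  # assumed terminal status names

def wait_for_training(
    get_status: Callable[[], str],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 6 * 3600,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> list[tuple[float, str]]:
    """Poll until a terminal status; return (elapsed_seconds, status) at each change."""
    start = clock()
    transitions: list[tuple[float, str]] = []
    last = None
    while True:
        status = get_status()
        elapsed = clock() - start
        if status != last:  # only record changes, not every poll
            transitions.append((round(elapsed, 1), status))
            last = status
        if status in TERMINAL:
            return transitions
        if elapsed > timeout_seconds:
            raise TimeoutError(f"still {status!r} after {elapsed:.0f}s")
        sleep(poll_seconds)
```

The difference between consecutive timestamps in the returned list gives the time spent in each phase, which can then be logged as step metadata.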

Continuous Model Improvement

A key advantage of the ZenML-OpenPipe integration is the ability to implement a continuous improvement cycle for your fine-tuned models:

- Initial training: Fine-tune a model on your current dataset

- Production deployment: Automatically handled by OpenPipe

- Feedback collection: Gather new examples and user interactions

- Dataset augmentation: Add new examples to your training data

- Retraining and redeployment: Run the pipeline again to update the model

With each iteration, both the dataset and model quality improve, creating a virtuous cycle of continuous enhancement. Since OpenPipe automatically redeploys your model with each training run, new capabilities are immediately available in production without additional deployment steps.

Check out OpenPipe's model monitoring documentation for more information about monitoring your fine-tuned models in production.

Deployment on ZenML Stacks

The integration can be deployed on any infrastructure stack supported by ZenML.

This enables powerful MLOps workflows:

- Automated retraining: Schedule regular fine-tuning runs

- Distributed execution: Run on Kubernetes, Vertex AI, or your preferred platform

- Scaling resources: Allocate appropriate compute for larger datasets

- Environment standardization: Ensure consistent execution environments

Key Takeaways

From implementing this integration with multiple customers, several key insights emerged:

- Fine-tuning beats prompting for domain specificity – Even the best prompts can't match the domain knowledge and consistency of a well fine-tuned model.

- Reproducibility is crucial – Tracking every parameter, dataset, and training run enables systematic improvement over time.

- Data quality matters more than quantity – Smaller, high-quality datasets often outperform larger but noisy datasets.

- Automation reduces operational overhead – Scheduled pipelines eliminate manual steps and ensure timely model updates.

- Metadata tracking enables governance – Complete lineage from data to model deployment satisfies compliance requirements.

- Automatic deployment accelerates time-to-value – With OpenPipe's instant deployment, fine-tuned models are immediately usable via API without additional DevOps work.

Next Steps

For teams looking to implement LLM fine-tuning in production, we recommend:

- Start with a well-defined use case where domain knowledge provides clear value

- Collect high-quality examples that represent your desired outputs

- Set up the ZenML-OpenPipe integration to manage your fine-tuning workflow

- Iterate based on metrics to improve model performance over time

- Implement continuous monitoring to detect when retraining is needed

With LLMs becoming a critical part of business applications, having a reliable, reproducible fine-tuning workflow isn't just convenient—it's essential for maintaining competitive advantage and ensuring consistent performance.

The combination of ZenML's robust MLOps capabilities and OpenPipe's fine-tuning expertise provides exactly that: a production-grade solution for teams serious about deploying custom LLMs at scale.

---

Ready to get started? Check out the ZenML-OpenPipe repository or join the ZenML Slack community for personalized guidance.