Effortlessly Run ZenML Pipelines in Isolated Docker Containers

Integrate ZenML with Docker to execute your ML pipelines in isolated environments locally. This integration simplifies debugging and ensures consistent execution across different systems.

Features with ZenML

- Isolated Pipeline Execution: Run each step of your ZenML pipeline in a separate Docker container, ensuring isolation and reproducibility.

- Local Debugging: Reproduce and debug container-specific pipeline failures on your own machine, with no remote infrastructure required.

- Consistent Environments: Maintain consistent execution environments across different systems by leveraging Docker containers.

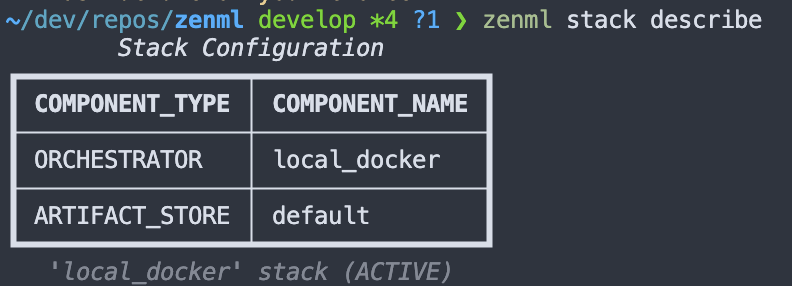

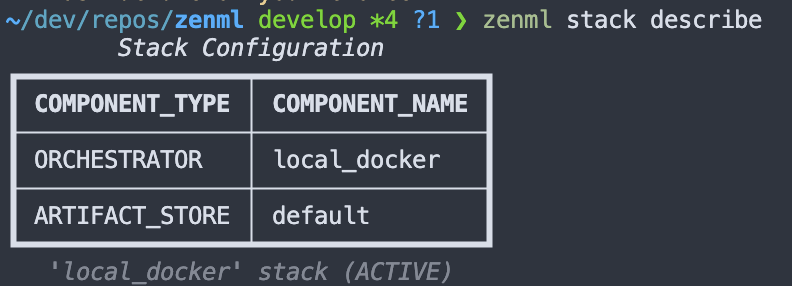

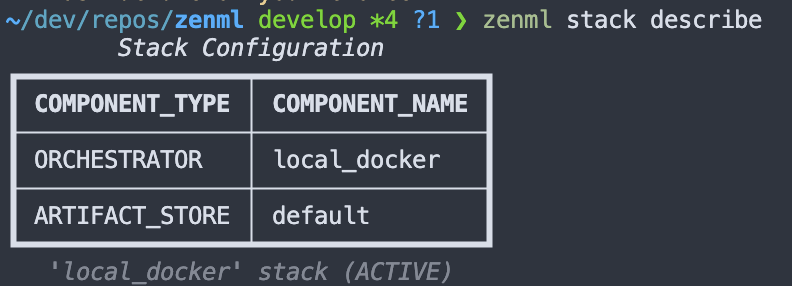

- Easy Setup: Seamlessly integrate Docker with ZenML using the built-in local Docker orchestrator.

Main Features

- Containerization of applications

- Isolation of processes and dependencies

- Portability across different systems

- Efficient resource utilization

- Reproducibility of environments

How to use ZenML with Docker

```python
from zenml import step, pipeline
from zenml.orchestrators.local_docker.local_docker_orchestrator import (
    LocalDockerOrchestratorSettings,
)


@step
def preprocess_data() -> list:
    # Preprocessing logic here; return the prepared data
    return []


@step
def train_model(data: list) -> None:
    # Model training logic here
    pass


# Extra arguments forwarded to the Docker containers that run the steps
settings = {
    "orchestrator.local_docker": LocalDockerOrchestratorSettings(
        run_args={"cpu_count": 2}
    )
}


@pipeline(settings=settings)
def ml_pipeline():
    # The output of the first step is passed to the second
    data = preprocess_data()
    train_model(data)


if __name__ == "__main__":
    ml_pipeline()
```

This example defines a simple ZenML pipeline whose steps each run in a Docker container when the pipeline is executed with the local Docker orchestrator. The pipeline has two steps, `preprocess_data` and `train_model`, and the output of the first is passed to the second. `LocalDockerOrchestratorSettings` supplies additional configuration, here `run_args={"cpu_count": 2}` to set the CPU count for the Docker containers. Calling `ml_pipeline()` runs the pipeline.
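Because the local Docker orchestrator needs a working Docker installation, it can help to check for one before launching a run. The following is a minimal sketch using only the Python standard library. `docker_available` is a hypothetical helper, not part of ZenML, and it assumes that a successful `docker info` call is a good enough signal that the Docker daemon is reachable.

```python
import shutil
import subprocess


def docker_available(timeout: float = 10.0) -> bool:
    """Return True if the docker CLI is on PATH and `docker info` succeeds.

    Hypothetical helper for illustration only; it is not a ZenML API.
    """
    # No docker executable on PATH means there is nothing to call.
    if shutil.which("docker") is None:
        return False
    try:
        # `docker info` exits with a non-zero code when the daemon is unreachable.
        result = subprocess.run(
            ["docker", "info"],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout,
            check=False,
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


if __name__ == "__main__":
    if docker_available():
        print("Docker is reachable.")
    else:
        print("Docker is not reachable; start Docker before running the pipeline.")
```

A guard such as `if docker_available(): ml_pipeline()` in the pipeline script's `__main__` block would then report a clear message up front rather than failing once the orchestrator tries to start a container.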

Additional Resources

Read the full documentation on the Local Docker Orchestrator

Learn how to enable GPU support with the Local Docker Orchestrator

Explore the ZenML SDK documentation for LocalDockerOrchestrator